Observability vs Monitoring vs Telemetry - Key Differences

The landscape of IT operations and system management is evolving rapidly. As systems become more complex, the need for comprehensive insights grows. Three key concepts — observability, monitoring, and telemetry — play crucial roles in modern IT environments. But what exactly are these concepts, and how do they differ? This article breaks down the distinctions and explores their interconnected roles in maintaining robust, efficient systems.

Quick Guide: Understanding the Trio

Before diving into the details, let's establish a quick overview of these three concepts:

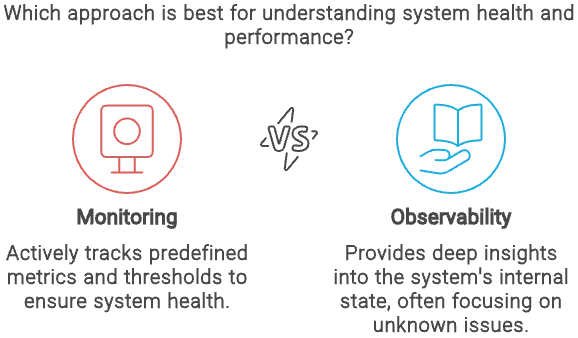

- Observability: The ability of a system to provide deep insights into its internal state, often focusing on unknown issues.

- Monitoring: Actively tracking predefined metrics and thresholds to ensure system health.

- Telemetry: The process of collecting and transmitting data from remote or inaccessible sources.

These concepts work together in modern IT environments to provide a comprehensive view of system performance and health. Telemetry feeds data to monitoring and observability tools, which then analyze this information to provide actionable insights.

The following sections cover each of these concepts in detail.

What is Observability?

Observability originates from control theory in engineering, where it refers to how well you can understand a system's internal states from its external outputs. In IT, observability provides insights into complex, distributed systems, often revealing unknown issues or behaviors. It emphasizes understanding the behavior of the entire system rather than individual components in isolation.

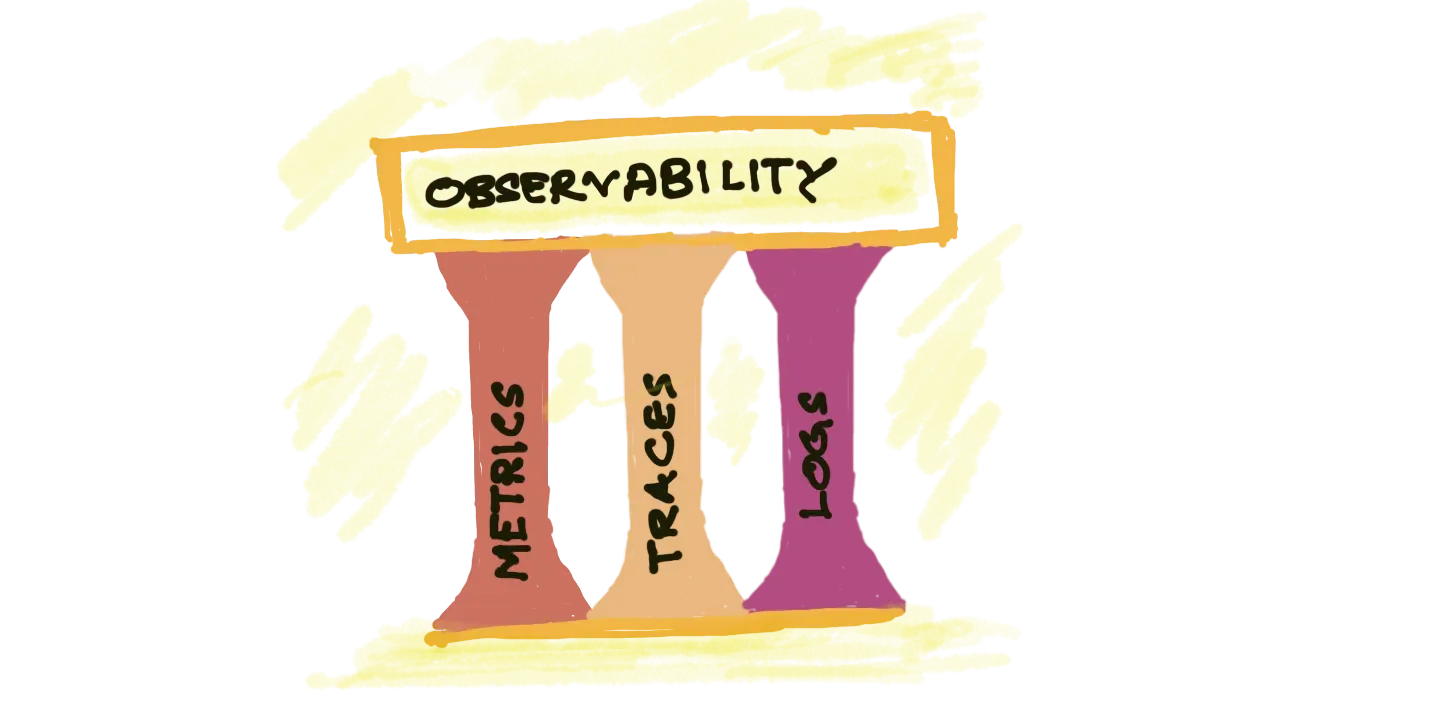

Observability rests on three pillars:

- Logs: These are detailed records of discrete events within a system, providing a history of its activities.

- Metrics: These are quantitative measurements of system performance, such as response time or resource usage, that are aggregated and analyzed over time.

- Traces: These are records of requests as they flow through distributed systems.
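To make the three signal types concrete, here is a minimal sketch of what each record might carry. The class names, field names, and sample values are illustrative assumptions, not the schema of SigNoz or any other tool.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class LogRecord:
    """A discrete event: what happened, and when."""
    timestamp: float
    level: str
    message: str
    trace_id: Optional[str] = None  # optional link back to a request


@dataclass
class MetricPoint:
    """A numeric measurement, meant to be aggregated over time."""
    timestamp: float
    name: str
    value: float
    labels: Dict[str, str] = field(default_factory=dict)


@dataclass
class Span:
    """One step of a request as it passes through a service."""
    trace_id: str
    span_id: str
    parent_span_id: Optional[str]
    service: str
    operation: str
    start: float
    end: float

    @property
    def duration_ms(self) -> float:
        return (self.end - self.start) * 1000.0


# One slow checkout request, seen through all three signals (made-up values).
log = LogRecord(1700000000.20, "ERROR", "payment gateway timeout", trace_id="t-1")
metric = MetricPoint(1700000000.25, "http.server.duration_ms", 250.0,
                     {"service": "checkout", "route": "/pay"})
span = Span("t-1", "s-2", "s-1", "checkout", "POST /pay",
            start=1700000000.00, end=1700000000.25)

# The shared trace_id is what lets a log line be tied to a specific request.
print(log.trace_id == span.trace_id, round(span.duration_ms))
```

The shared `trace_id` field is the design choice worth noticing: it is what allows a log line and a span to be treated as two views of the same request.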

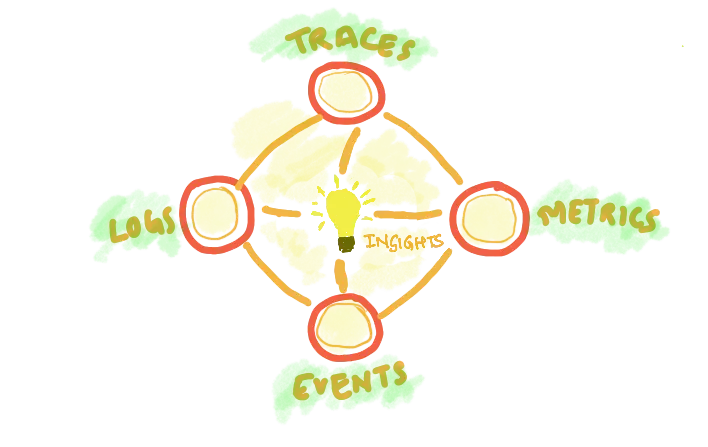

At SigNoz, we envision observability as a mesh or network rather than a set of pillars. This interconnected approach allows for more powerful correlations and faster problem-solving.

How Observability Works

Observability works through a series of steps that provide deep visibility into a system's internal state by continuously collecting, correlating, and analyzing data. Let’s look at each step one by one.

Collection of High-Cardinality Data

The first step in observability is the collection of high-cardinality data, meaning attributes with many distinct values, such as unique user IDs, IP addresses, or transaction IDs. This data is critical for tracking specific events within a system at a detailed level. By capturing it, observability tools let teams examine individual components and interactions, which is essential for identifying hidden issues. High-cardinality data provides the context needed to understand the system's state and detect anomalies.
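As a rough illustration of what "high cardinality" means in practice, the sketch below counts the distinct values of each attribute in a handful of events. The event fields and values are hypothetical.

```python
from collections import defaultdict

# Hypothetical request events; field names are assumptions for illustration.
events = [
    {"user_id": "u-101", "region": "eu", "status": 200},
    {"user_id": "u-102", "region": "eu", "status": 200},
    {"user_id": "u-103", "region": "us", "status": 500},
    {"user_id": "u-104", "region": "us", "status": 200},
    {"user_id": "u-101", "region": "eu", "status": 500},
]


def cardinality(records):
    """Count how many distinct values each attribute takes."""
    seen = defaultdict(set)
    for record in records:
        for key, value in record.items():
            seen[key].add(value)
    return {key: len(values) for key, values in seen.items()}


counts = cardinality(events)
print(counts)  # user_id has the most distinct values, so it is the high-cardinality field

# Keeping the high-cardinality field lets you ask which specific users were affected.
failing_users = sorted({e["user_id"] for e in events if e["status"] >= 500})
print(failing_users)
```

In a real system `user_id` could take millions of values, which is why storing and indexing it is more expensive than a low-cardinality label such as `region`, and also why it is so useful when narrowing down a problem.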

Correlation and Analysis of Diverse Data Points

Next, observability involves correlating and analyzing diverse data points, such as logs, metrics, and traces. This step allows teams to see how different parts of the system affect each other. For example, an increase in CPU usage might correlate with a rise in error logs. By analyzing these correlations, observability tools reveal patterns and relationships that help identify the root cause of issues, offering a comprehensive view of the system's behavior.
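The CPU and error-log example can be sketched with a plain Pearson correlation over two aligned series. The numbers are invented, and a real observability tool would use far richer correlation than this; a high coefficient is a hint about where to look, not proof of a root cause.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length with at least 2 points")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)


# Hypothetical per-minute samples taken over the same seven minutes.
cpu_percent = [35, 40, 38, 72, 88, 91, 45]
error_logs = [1, 2, 1, 9, 14, 17, 3]

r = pearson(cpu_percent, error_logs)
print(f"correlation between CPU usage and error count: {r:.2f}")
```

A value near 1 suggests the two signals rise and fall together, which would justify looking at traces from the same time window to find out why.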

Use of Advanced Querying and Visualization Tools

The final step involves using advanced querying and visualization tools to interpret the collected data. These tools enable teams to filter, search, and analyze information quickly, making it easier to detect and diagnose problems. Visualization tools, like dashboards and heat maps, present data in an intuitive format, allowing teams to monitor the system's health in real time and perform root cause analysis when issues arise. This step ensures that teams can act on the data effectively to maintain system reliability.
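A query of the "filter, then group, then aggregate" kind might look like the following sketch, which computes a 95th-percentile latency per route. The span fields and the helper names `percentile` and `p95_by` are assumptions made for this example, not a real query API.

```python
import math
from collections import defaultdict


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list (pct is an integer 1..100)."""
    if not values:
        raise ValueError("percentile of an empty list is undefined")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def p95_by(records, key, where=None):
    """Group records by `key`, optionally filter them, and return p95 duration per group."""
    groups = defaultdict(list)
    for record in records:
        if where is None or where(record):
            groups[record[key]].append(record["duration_ms"])
    return {group: percentile(durations, 95) for group, durations in groups.items()}


# Hypothetical span data.
spans = [
    {"route": "/pay", "status": 200, "duration_ms": 120},
    {"route": "/pay", "status": 200, "duration_ms": 130},
    {"route": "/pay", "status": 500, "duration_ms": 900},
    {"route": "/pay", "status": 200, "duration_ms": 110},
    {"route": "/cart", "status": 200, "duration_ms": 40},
    {"route": "/cart", "status": 200, "duration_ms": 45},
    {"route": "/cart", "status": 200, "duration_ms": 50},
]

overall = p95_by(spans, "route")
failed_only = p95_by(spans, "route", where=lambda s: s["status"] >= 500)
print(overall)
print(failed_only)
```

The same grouped result is what a dashboard panel or heat map would plot; the query and the visualization are two views of one aggregation.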

What is Monitoring?

Monitoring is the traditional approach to system oversight. It focuses on tracking known metrics and thresholds, alerting teams when predefined conditions are met.

Types of monitoring include:

- Infrastructure monitoring (CPU usage, memory, disk space)

- Application monitoring (response times, error rates)

- Network monitoring (bandwidth usage, latency)

Monitoring relies on tools like SigNoz to track system performance and health. These tools collect and visualize key metrics such as CPU usage, memory consumption, and network traffic. When a metric exceeds its predefined threshold, an alert is sent to system administrators so they can address the potential issue quickly. By applying tools like SigNoz to infrastructure, applications, and networks, administrators can maintain a clear, real-time view of overall system health.
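Threshold-based alerting can be reduced to a very small sketch. The metric names and limits below are hypothetical, and real tools add evaluation windows, severities, and notification routing on top of this core check.

```python
# Hypothetical alert rules: metric name -> upper limit.
THRESHOLDS = {
    "cpu_percent": 85.0,
    "memory_percent": 90.0,
    "p99_latency_ms": 500.0,
}


def check_thresholds(sample, thresholds):
    """Return one alert message per metric that exceeds its predefined limit."""
    alerts = []
    for name, limit in thresholds.items():
        value = sample.get(name)
        if value is not None and value > limit:
            alerts.append(f"{name}={value} exceeds {limit}")
    return alerts


sample = {
    "cpu_percent": 92.5,
    "memory_percent": 71.0,
    "p99_latency_ms": 430.0,
    "queue_depth": 10000,  # no rule exists for this metric, so it is never checked
}

alerts = check_thresholds(sample, THRESHOLDS)
print(alerts)
```

Note that `queue_depth` is ignored however large it gets, because nobody wrote a rule for it. The check only covers conditions that were anticipated in advance.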

Monitoring is effective for known issues but has limitations in complex, dynamic environments. It often misses issues you didn’t anticipate or set up alerts for. As a reactive approach, it can overlook emerging problems that don’t fit existing metrics, making it less adaptable to new variables. This challenge is evident in modern, distributed systems with unpredictable component interactions, underscoring the need for observability, which provides a more advanced approach.

Challenges with Legacy Monitoring

Legacy monitoring runs into several recurring challenges:

- Scalability: As systems grow to include many microservices, the number of metrics to monitor rises sharply. Traditional monitoring tools cannot efficiently handle the distributed nature of microservices. The volume of data generated by each microservice, coupled with the need to monitor their interactions, can overwhelm these tools.

- Alert fatigue: Too many alerts can lead to important issues being overlooked. Traditional monitoring relies on static thresholds, which often generate many alerts that do not indicate a real issue. For example, a brief spike in CPU usage can trigger an alert even if it doesn’t impact system performance. Over time, these unnecessary alerts cause alert fatigue, and system administrators start to ignore them. This raises the chance that a genuinely critical alert is missed, leading to severe outages or performance degradation.

- Lack of context: Isolated metrics often fail to provide the full picture of system behavior. For example, monitoring CPU usage without correlating it with application performance or user experience data gives an incomplete view of system health. This lack of context makes issues harder to diagnose and resolve, because administrators must manually piece together information from different sources. In complex systems, that manual work lengthens troubleshooting and makes problem resolution inefficient.

- Unknown issue handling: Legacy monitoring struggles with unknown issues because it depends on predefined metrics and thresholds, which makes it effective only for anticipated errors. When an unexpected error occurs, it often goes undetected, so critical issues may be noticed only after they have escalated.
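The alert fatigue point above can be illustrated by comparing a purely static threshold with one that requires a sustained breach. This is a minimal sketch with invented CPU samples; requiring several consecutive breaches is one common mitigation, not the only one.

```python
def static_alerts(series, limit):
    """Indices of every sample that exceeds the limit (one alert per sample)."""
    return [i for i, value in enumerate(series) if value > limit]


def sustained_alerts(series, limit, for_samples):
    """Indices where the limit has just been exceeded for `for_samples` samples in a row."""
    fired = []
    streak = 0
    for i, value in enumerate(series):
        streak = streak + 1 if value > limit else 0
        if streak == for_samples:  # fire once per sustained breach
            fired.append(i)
    return fired


# Hypothetical CPU samples: two brief spikes, then a real sustained problem.
cpu = [40, 95, 42, 41, 96, 44, 91, 93, 97, 98, 50]

noisy = static_alerts(cpu, limit=90)
quiet = sustained_alerts(cpu, limit=90, for_samples=3)
print(len(noisy), "alerts with a static threshold")
print(len(quiet), "alert when the breach must last 3 samples")
```

The sustained variant trades a short detection delay for far fewer notifications, which is often acceptable for signals like CPU where brief spikes are harmless.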

What is Telemetry?

Telemetry involves the collection and transmission of data from remote or inaccessible sources. In IT operations, it gathers logs, metrics, traces, and events, providing insights into system performance and behavior. Logs capture specific events within a system, metrics offer quantifiable data over time, traces track requests across services, and events record state changes. This varied data is crucial in cloud-native and distributed systems, where manual monitoring is impractical.

Telemetry serves as the foundation for both observability and monitoring. Monitoring uses telemetry to track predefined metrics, while observability leverages it for deeper insights, especially in handling unexpected issues. This makes telemetry essential for real-time oversight, sophisticated analysis, and troubleshooting, ensuring reliable, high-performance IT environments.
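The "collect and transmit" part of telemetry can be sketched as a small buffer that batches records and writes them to a sink as JSON lines. The `TelemetryBuffer` class, its record layout, and the in-memory sink are assumptions for illustration; production systems typically use an agent or SDK (for example, OpenTelemetry) and send data over the network instead.

```python
import io
import json
import time


class TelemetryBuffer:
    """Collects telemetry records and ships them to a sink in batches."""

    def __init__(self, sink, batch_size=3):
        self.sink = sink              # any object with a write(str) method
        self.batch_size = batch_size
        self._pending = []

    def record(self, signal, name, body, timestamp=None):
        """Queue one record; `signal` is e.g. 'log', 'metric', 'trace', or 'event'."""
        self._pending.append({
            "ts": time.time() if timestamp is None else timestamp,
            "signal": signal,
            "name": name,
            "body": body,
        })
        if len(self._pending) >= self.batch_size:
            self.flush()

    def flush(self):
        """Transmit everything queued so far, one JSON document per line."""
        for item in self._pending:
            self.sink.write(json.dumps(item, sort_keys=True) + "\n")
        self._pending.clear()


sink = io.StringIO()  # stands in for a network connection or file
buffer = TelemetryBuffer(sink, batch_size=2)
buffer.record("metric", "cpu_percent", {"value": 71.5}, timestamp=1.0)
buffer.record("log", "app", {"level": "ERROR", "message": "timeout"}, timestamp=2.0)
buffer.record("event", "deploy", {"version": "1.4.2"}, timestamp=3.0)
buffer.flush()  # send whatever is left in the buffer

lines = sink.getvalue().splitlines()
print(len(lines), "records transmitted")
```

Whatever consumes these lines, a monitoring rule or an exploratory query, works from the same stream, which is why telemetry sits underneath both practices.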

Types of Telemetry Data in Observability

In the context of observability, telemetry provides a wealth of data:

- Application Performance Data: This type of telemetry provides response times, error rates, and resource usage data at the application level. It helps teams understand how applications perform in real time and identify bottlenecks or areas for optimization.

- Infrastructure Metrics: This type of telemetry focuses on CPU, memory, and disk usage across servers and containers, covering the underlying hardware and cloud resources that support applications. Effective monitoring of these metrics ensures that the infrastructure remains robust and capable of supporting the demands applications place on it.

- User Behavior Analytics: This is a more recent addition to telemetry that covers how users interact with a system, including page views, clicks, session durations, and feature usage. It is usually employed in user-centric applications. Understanding user behavior helps teams optimize the user experience and provides early indications of issues such as drops in engagement or performance problems.

- Network Traffic Information: This type of telemetry provides insight into data flow across the network, tracking parameters such as bandwidth usage, packet loss, and latency between system components. It is important for identifying and resolving connectivity issues and for ensuring data is transmitted efficiently and securely between different parts of a system, especially in distributed architectures.

This diverse data set allows observability tools to build a comprehensive picture of system behavior.

Key Differences: Observability vs Monitoring vs Telemetry

While these concepts are interconnected, they serve distinct roles in IT operations:

Scope and Depth of Insights

- Observability: Provides deep and comprehensive insights into system behavior, allowing for a thorough understanding of the system, even in unforeseen or unexpected scenarios.

- Monitoring: Focuses on real-time visibility into predefined metrics and thresholds. It ensures the system operates within expected parameters, offering insights into specific, known aspects of performance.

- Telemetry: Collects raw data that underpins both observability and monitoring. While it doesn’t provide insights directly, it supplies the data required for these practices.

Approach to Problem Detection and Resolution

- Observability: Proactive and investigative, allowing for the exploration of unexpected issues. It supports an exploratory approach to problem detection and resolution by analyzing various data sources.

- Monitoring: Reactive, designed to alert operators when predefined thresholds are breached. It quickly identifies known issues based on set parameters, though it may not catch unforeseen problems.

- Telemetry: Supplies the data needed for both observability and monitoring to detect and resolve issues. It acts as the source of information that these systems use to identify and address problems.
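The contrast in the list above can be sketched by running both approaches over the same telemetry. The request records and field names are hypothetical: the monitoring check answers a question fixed in advance, while the breakdown can be pointed at any attribute after the fact.

```python
from collections import Counter

# Hypothetical request telemetry shared by both approaches.
requests = [
    {"route": "/pay", "region": "eu", "app_version": "2.1", "ok": True},
    {"route": "/pay", "region": "us", "app_version": "2.1", "ok": True},
    {"route": "/cart", "region": "eu", "app_version": "2.1", "ok": True},
    {"route": "/cart", "region": "us", "app_version": "2.2", "ok": False},
    {"route": "/pay", "region": "eu", "app_version": "2.2", "ok": False},
    {"route": "/cart", "region": "eu", "app_version": "2.1", "ok": True},
    {"route": "/pay", "region": "us", "app_version": "2.1", "ok": True},
    {"route": "/cart", "region": "us", "app_version": "2.1", "ok": True},
]


def error_rate(records):
    return sum(not r["ok"] for r in records) / len(records)


def monitoring_check(records, max_error_rate):
    """Monitoring: a predefined question with a yes/no answer."""
    return error_rate(records) > max_error_rate


def breakdown(records, attribute):
    """Observability: error rate sliced by any attribute chosen during investigation."""
    totals = Counter(r[attribute] for r in records)
    errors = Counter(r[attribute] for r in records if not r["ok"])
    return {value: errors[value] / totals[value] for value in totals}


print("alert fired:", monitoring_check(requests, max_error_rate=0.10))
for attribute in ("route", "region", "app_version"):
    print(attribute, breakdown(requests, attribute))
```

In this made-up data the alert says only that something is wrong. Slicing by `route` and `region` shows nothing unusual, while slicing by `app_version` points at a single release, which is the kind of question nobody would have written a threshold for beforehand.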

Data Collection and Analysis Methodologies

- Observability: Involves analyzing diverse data sources, including logs, metrics, and traces, to understand the overall state of the system. This comprehensive analysis is key to gaining deep insights.

- Monitoring: Tracks specific metrics and thresholds to monitor system health. It focuses on predefined indicators crucial for maintaining system stability and performance.

- Telemetry: Involves gathering various types of data—such as logs, metrics, traces, and events—for further analysis. This collected data is essential for both observability and monitoring to function effectively.

Applicability to Modern Software Architecture

- Observability: Essential for complex, distributed systems to understand interactions and root causes. It is particularly valuable in modern architecture where systems are dynamic and interdependent.

- Monitoring: Suitable for traditional and simpler systems where known parameters apply. While still valuable in modern contexts, it may not capture the full complexity of distributed systems.

- Telemetry: Crucial for both observability and monitoring, especially in dynamic and cloud-native environments. It provides the data needed to manage and understand modern software architectures effectively.

How to Implement Effective Observability with SigNoz

SigNoz is an open-source observability platform that combines the power of monitoring, observability, and telemetry. It offers:

- End-to-end tracing of requests across microservices

- Custom dashboards for visualizing metrics and logs

- Alerts based on complex conditions and trends

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

By using SigNoz, you can implement comprehensive observability practices, leveraging the strengths of monitoring and telemetry in a unified platform.

The Future of Observability, Monitoring, and Telemetry

Observability, monitoring, and telemetry are set to evolve significantly, driven by advancements in technology, increasing complexity in IT environments, and the growing demand for real-time insights. Emerging trends include:

- AI Integration: Machine learning algorithms will enhance anomaly detection and predictive analytics.

- Unified Platforms: Tools that combine observability, monitoring, and telemetry will become more prevalent.

- User Experience Focus: Metrics will increasingly tie directly to user experience and business outcomes.

- Automation: Automated remediation based on observability insights will become more common.

Key Takeaways

- Observability provides deep system insights beyond predefined metrics.

- Monitoring focuses on known issues and thresholds.

- Telemetry is the foundation, providing data for both monitoring and observability.

- Integration of all three is crucial for modern IT operations.

- Tools like SigNoz offer comprehensive solutions for today's complex environments.

FAQs

What's the main difference between observability and monitoring?

Observability provides deep, exploratory insights into system behavior, often revealing unknown issues. Monitoring focuses on tracking predefined metrics and thresholds, alerting on known issues.

Can you have observability without telemetry?

No, telemetry is essential for observability. Telemetry provides the raw data that observability tools analyze to generate insights.

How does telemetry contribute to both monitoring and observability?

Telemetry collects and transmits data from various system components. This data feeds both monitoring tools (for tracking known metrics) and observability platforms (for deeper analysis and insight generation).

Is it necessary to implement all three: observability, monitoring, and telemetry?

For comprehensive system oversight, especially in complex environments, implementing all three is highly beneficial. They work together to provide a complete picture of system health and performance.