Datadog Pricing Main Caveats Explained [Updated for 2026]

Datadog is one of the most widely used observability platforms. With a market capitalisation of 40 billion USD, it is the leading company in the observability domain. The product is strong and offers a wide array of features, but one common complaint against Datadog is its pricing and billing practices.

In this post, we will cover:

- Main caveats in Datadog’s pricing

- A simplified breakdown of Datadog core product pricing

- Introducing SigNoz - a simpler and predictable Datadog alternative

If you’re thinking of adopting Datadog as your observability tool, you should definitely be aware of these caveats or pain points in their billing practices.

Main caveats in Datadog pricing explained

The core of the confusion with Datadog's pricing lies in its multi-dimensional, usage-based model. You're not just paying for one thing; you're charged across various products, each with its own pricing metric. This can lead to unpredictable bills that are difficult to forecast and control.

Here are some of the most common pain points users experience:

Per-Host Billing Punishes Modern Architectures

Datadog's pricing for Infrastructure and APM is often tied to the number of hosts you're monitoring. In an era of dynamic, containerized environments and microservices, this model can feel outdated and punitive. We've seen teams resort to using larger, more expensive instances just to minimise their Datadog host count, a clear sign of optimising for the observability tool rather than for their own architecture.

The price for core services like Infrastructure monitoring starts at $15 per host/month and APM & continuous profiler starts at $31 per host/month.

The definition of a "host" is broad (a VM, a Kubernetes node, an Azure App Service Plan, etc.), and you are billed using a high-water mark system. Datadog measures your host count every hour, discards the top 1% of hours with the highest usage, and then bills you for the entire month based on the next highest hour. This model protects you from very brief, anomalous spikes but penalizes you for any period of sustained high usage.

Let’s understand this with an example:

You run an application on 50 hosts. For a 5-day marketing event, you scale up to 200 hosts to handle the increased load. At the end of the month, Datadog ignores the top 1% of hours (roughly 7 hours of your peak usage) but sees that for the rest of that 5-day period, your host count was consistently 200.

Your APM bill for that month will be calculated based on the high-water mark of 200 hosts, not your typical 50.

Your Bill: $6,200 (200 hosts x $31/month)

Your Expectation (based on average): Roughly $2,325 (an average of 75 hosts across a 30-day month x $31/month)

You pay for your peak week for the entire month, forcing you to make architectural choices based on monitoring costs, not application needs.
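The arithmetic behind this example can be reproduced with a short sketch. It assumes a 30-day month, an hourly host count, and that the discarded 1% of hours is rounded down; the function name `billable_hosts` is our own illustration, not part of any Datadog API.

```python
import math

HOURS_PER_DAY = 24
APM_PRICE_PER_HOST = 31  # USD per host per month, as quoted above


def billable_hosts(hourly_counts):
    """Discard the top 1% of hours, then return the highest remaining count."""
    ordered = sorted(hourly_counts, reverse=True)
    dropped = math.floor(len(ordered) * 0.01)  # assumption: rounded down
    return ordered[dropped]


# Assumed 30-day month: 25 days at 50 hosts, 5 event days at 200 hosts.
event_month = [50] * (25 * HOURS_PER_DAY) + [200] * (5 * HOURS_PER_DAY)

peak_hosts = billable_hosts(event_month)
average_hosts = sum(event_month) / len(event_month)

high_water_bill = peak_hosts * APM_PRICE_PER_HOST
average_based_bill = average_hosts * APM_PRICE_PER_HOST

# A spike shorter than the discarded window (5 hours here) is ignored.
brief_spike_month = [50] * 715 + [200] * 5
brief_spike_hosts = billable_hosts(brief_spike_month)

print(f"Billable hosts: {peak_hosts}, bill: ${high_water_bill:,}")
print(f"Average hosts: {average_hosts:.0f}, average-based bill: ${average_based_bill:,.0f}")
print(f"Billable hosts after a 5-hour spike: {brief_spike_hosts}")
```

Under these assumptions the 5-day event sets the billable count at 200 hosts, while a spike of only a few hours would have been discarded entirely.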

Another issue with Datadog’s host-based pricing is the container trap: the model is especially dangerous in a containerised environment. The recommended setup is one Datadog Agent per host (e.g., per Kubernetes node). If you mistakenly configure an agent to run in every container/pod, each of those pods will be billed as a separate host. A simple misconfiguration on a 50-node cluster running hundreds of pods could increase your bill by 10x or more.
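To put a number on the container trap, here is a minimal sketch. The pod count of 500 is a hypothetical figure chosen for illustration; the $15 price is the Infrastructure rate quoted earlier.

```python
INFRA_PRICE_PER_HOST = 15  # USD per host per month, as quoted above

nodes = 50
pods = 500  # hypothetical pod count for this cluster

correct_bill = nodes * INFRA_PRICE_PER_HOST        # one agent per node
misconfigured_bill = pods * INFRA_PRICE_PER_HOST   # one agent per pod
multiplier = misconfigured_bill / correct_bill

print(f"Per-node agents: ${correct_bill:,}/month")
print(f"Per-pod agents:  ${misconfigured_bill:,}/month ({multiplier:.0f}x)")
```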

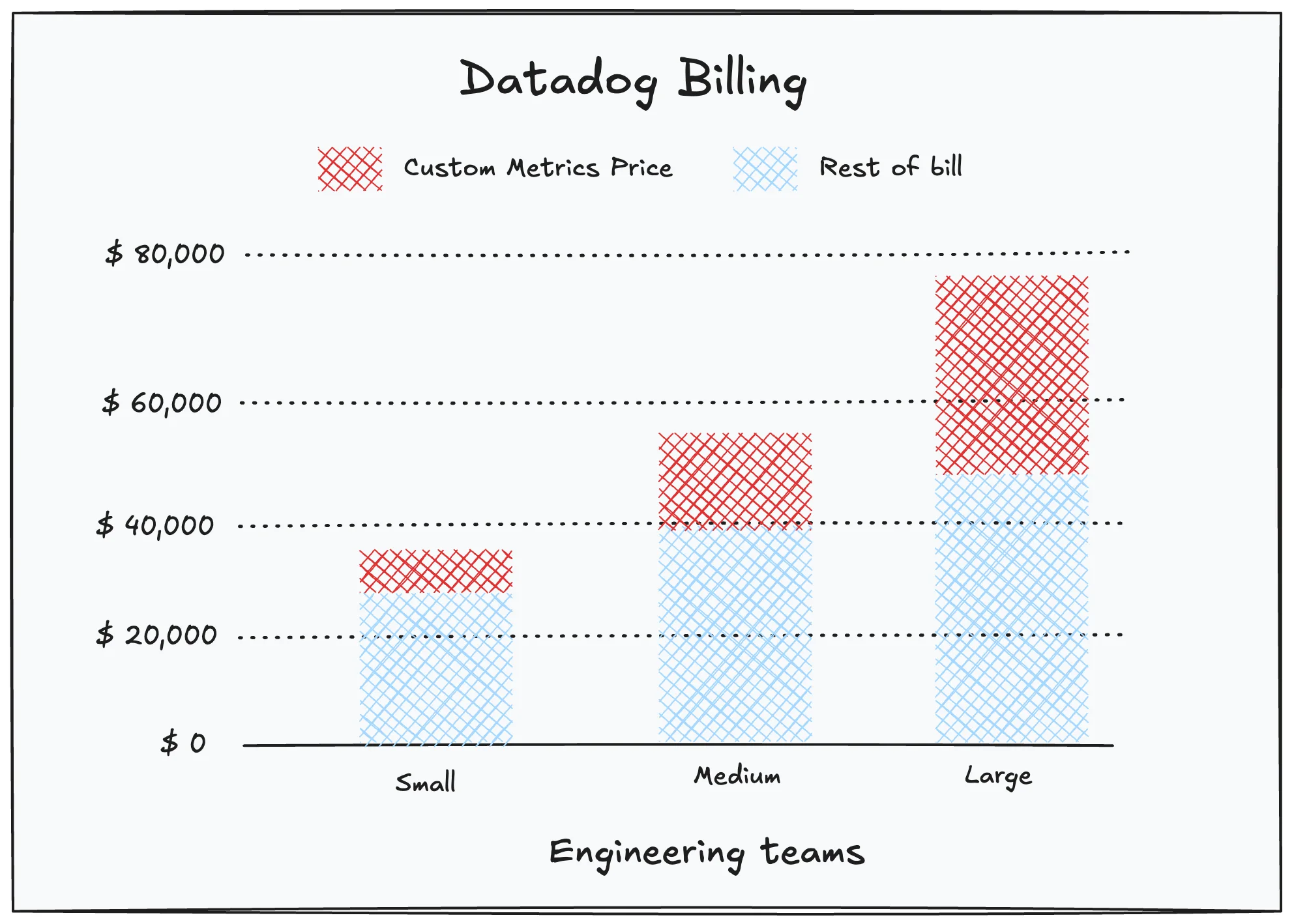

The 'Custom Metric Tax' on Modern Observability

This is often the most unpredictable and painful part of a Datadog bill. Datadog charges a premium for "custom metrics," which are any metrics not from a standard integration. This includes virtually all metrics you'd create for your own application, and critically, all metrics sent via the open-source standard OpenTelemetry (OTel) are billed as custom.

At scale, custom metrics can constitute up to 52% of your total Datadog bill.

The cost is driven by cardinality—the number of unique combinations of a metric name and its tags (host, endpoint, customer_id, etc.). Adding a single tag with many unique values can cause your metric count, and therefore your bill, to explode.

The Price:

- Pro Plan Allotment: 100 custom metrics per host.

- Overage Cost: $5.00 per 100 custom metrics/month (for metrics over your allotment, billed on the monthly average).

Billing Impact Example:

You want to monitor API latency with a metric named api.request.latency. You have 10 API endpoints, and you want to track latency by the HTTP status code (e.g., 200, 404, 500) and by the customer tier (free, pro, enterprise).

Let's calculate the potential number of unique metrics (timeseries):

10 endpoints x 3 status codes x 3 customer tiers = 90 unique custom metrics
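The same multiplication can be sketched in a few lines, which also makes it easy to see what one high-cardinality tag does. The figures of 1,000 customer IDs and 50 hosts are hypothetical, the helper names are ours, and the product is an upper bound: only tag combinations that actually report data are counted.

```python
from math import prod


def timeseries_count(tag_cardinalities):
    """Upper bound on unique series: the product of each tag's value count."""
    return prod(tag_cardinalities.values())


def overage_cost(series, hosts, allotment_per_host=100, price_per_100=5.00):
    """Monthly overage in USD for series beyond the per-host allotment."""
    over = max(0, series - hosts * allotment_per_host)
    return over / 100 * price_per_100


tags = {"endpoint": 10, "status_code": 3, "customer_tier": 3}
base_series = timeseries_count(tags)

# Hypothetical: add a customer_id tag with 1,000 distinct values.
tags_with_customer = {**tags, "customer_id": 1_000}
expanded_series = timeseries_count(tags_with_customer)

# Hypothetical: 50 hosts on the Pro plan (100 custom metrics each).
overage = overage_cost(expanded_series, hosts=50)

print(f"Base series: {base_series}")
print(f"With customer_id: {expanded_series:,}")
print(f"Monthly overage: ${overage:,.2f}")
```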

This is for just one metric name. If you add another tag with high cardinality, like customer_id, the count can instantly jump into the thousands, triggering significant overage fees.

The "Metrics without Limits™" Caveat:

To manage this, Datadog offers "Metrics without Limits™," which lets you control which tags are indexed (and thus searchable). While this can lower costs, it introduces its own complexity and a new charge:

- Indexed Metrics: You are billed the standard overage rate for the metrics you choose to index with specific tags.

- Ingested Metrics: You also pay a separate, smaller fee ($0.10 per 100 metrics) for all the original metric combinations you sent to Datadog before your tags were filtered.

Essentially, you are either paying a high price for full visibility or juggling two different charges to reduce it, adding another layer of complexity to your bill.
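A rough sketch of the two charges side by side, using the rates quoted above. The series counts (90,000 ingested, 9,000 kept indexed) are hypothetical, and the per-host allotment is ignored to keep the comparison simple.

```python
INDEXED_PRICE_PER_100 = 5.00   # USD, standard overage rate quoted above
INGESTED_PRICE_PER_100 = 0.10  # USD, ingested-metrics fee quoted above


def metrics_without_limits_cost(ingested_series, indexed_series):
    """Return (indexed charge, ingested charge) in USD per month."""
    indexed = indexed_series / 100 * INDEXED_PRICE_PER_100
    ingested = ingested_series / 100 * INGESTED_PRICE_PER_100
    return round(indexed, 2), round(ingested, 2)


# Hypothetical: 90,000 series sent, 9,000 kept indexed after dropping tags.
full_visibility_cost = round(90_000 / 100 * INDEXED_PRICE_PER_100, 2)
indexed_cost, ingested_cost = metrics_without_limits_cost(90_000, 9_000)

print(f"Everything indexed: ${full_visibility_cost:,.2f}")
print(f"Filtered: ${indexed_cost:,.2f} indexed + ${ingested_cost:,.2f} ingested")
```

The filtered bill is lower in this scenario, but it now has two line items to forecast, and any tag you drop is no longer queryable.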

You Pay Twice for Log Management

Datadog's log management pricing is a classic "two-part tariff": it looks inexpensive at first glance but can become costly as you actually use the service. You are billed on two separate axes: first to collect your logs (Ingest), and then a second, much higher fee to make them searchable (Index).

- The Price:

  - Ingest: $0.10 per GB to collect, process, and archive your logs.

  - Index: $1.70 per million log events to make them searchable for 15 days.

- The Problem: This model forces you to pay twice for the same data if you want it to be useful for debugging. You must pay to collect every gigabyte of logs, but to get real-time query and alerting capabilities, you must also pay the much higher per-event indexing fee. This creates a strong financial disincentive to log comprehensively.

Imagine your application generates a modest 200 GB of logs in a month, which equates to roughly 100 million log events.

- Ingest Cost: You are first charged $20 (200 GB x $0.10) just to get the logs into Datadog's system.

- Indexing Cost: To make these logs searchable for your developers during an incident, you then pay an additional $170 (100 million events x $1.70).

Your total bill is $190. To cut costs, you might decide to only index 20% of your logs. While this lowers your bill, it means that during an outage, 80% of your potentially critical log data is not immediately searchable, defeating the purpose of a centralized logging platform. You are forced to choose between cost and visibility right when you need visibility the most.
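The trade-off can be expressed as a small calculation using the rates quoted above. The function name `log_bill` and the `indexed_fraction` parameter are our own illustration, not a Datadog setting.

```python
INGEST_PRICE_PER_GB = 0.10      # USD per GB ingested
INDEX_PRICE_PER_MILLION = 1.70  # USD per million events indexed (15 days)


def log_bill(ingested_gb, events_millions, indexed_fraction=1.0):
    """Return (ingest, index, total) in USD for one month of logs."""
    ingest = ingested_gb * INGEST_PRICE_PER_GB
    index = events_millions * indexed_fraction * INDEX_PRICE_PER_MILLION
    return round(ingest, 2), round(index, 2), round(ingest + index, 2)


# 200 GB and roughly 100 million events, as in the example above.
full = log_bill(200, 100)
partial = log_bill(200, 100, indexed_fraction=0.2)

print(f"Index everything: ingest ${full[0]}, index ${full[1]}, total ${full[2]}")
print(f"Index 20%:        ingest ${partial[0]}, index ${partial[1]}, total ${partial[2]}")
```

Indexing only 20% brings the total down to about $54, but the ingest charge stays the same and 80% of the events are not searchable.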

A Simplified Breakdown of Datadog's Core Product Pricing

To give you a clearer picture, here’s a simplified overview of how Datadog prices its main offerings.

(Note: Prices are based on publicly available information for annual billing as of June 2025 and may be subject to change. It's always best to consult Datadog's official pricing page for the latest figures.)

| Product | Common Pricing Metric | Typical Starting Price (Annual Billing) | Key Considerations |
|---|---|---|---|
| Infrastructure | Per host per month | $15 | Costs increase with container usage; high-water mark billing applies. |
| APM & Continuous Profiler | Per host per month | $31 | Can become very expensive in distributed or microservices architectures. |
| Log Management | Per GB Ingested & Per Million Events Indexed | $0.10 / GB (Ingest) + $1.70 / Million Events (Index) | You pay twice for logs you want to search, making comprehensive logging costly. |
| Custom Metrics | Per 100 metrics per month (beyond allotment) | $5.00 | A major source of hidden costs, especially for users of OpenTelemetry. |
| Real User Monitoring (RUM) | Per 1,000 sessions per month | $1.50 | Directly scales with your user traffic, can be expensive for high-traffic sites. |
| Synthetic API Tests | Per 10,000 test runs per month | $5.00 | Cost depends on the frequency and number of locations you test from. |
| Synthetic Browser Tests | Per 1,000 test runs per month | $12.00 | More expensive tests that simulate a full user journey in a browser. |
| Database Monitoring | Per database host per month | $70 | A premium product; costs add up quickly if you have many database instances. |
| Cloud Security Management | Per host per month | $15 | Adds security monitoring capabilities to your infrastructure observability. |
| Application Security Management | Per service per month | $33 | Analyzes code-level vulnerabilities within your applications. |

Why SigNoz is a Simpler, More Predictable Datadog Alternative

If you're looking for a powerful observability solution without the complex and often surprising pricing, SigNoz is an excellent alternative to Datadog. Here's why:

OpenTelemetry Native - No vendor lock-in in your code

SigNoz is built from the ground up to be OpenTelemetry native. This means we fully leverage OTel's semantic conventions, providing deeper, out-of-the-box insights. Unlike Datadog, we don't charge you extra for "custom metrics" when you're using OpenTelemetry. This fundamental difference means you can embrace open standards without the fear of a massive bill.

We've also recently launched features that double down on our OTel-native approach, including:

- LLM Observability: Monitor your LLM applications (OpenAI, Anthropic, LangChain) with out-of-the-box dashboards.

- Trace Funnels: Intelligently sample and analyze traces to focus on what's important.

- External API Monitoring: Gain visibility into the performance of third-party APIs your application depends on.

- Out-of-the-box Messaging Queue Monitoring: Effortlessly monitor popular queuing systems.

Flexible Hosting Options for Every Stage of Growth

We believe you shouldn't be locked into a single deployment model. SigNoz offers a range of options to meet your needs as you scale:

- SigNoz Cloud: A fully-managed, scalable solution for teams that want to focus on their core business without the overhead of managing an observability platform.

- SigNoz Enterprise: For organizations with strict data residency or privacy requirements, we offer a self-hosted enterprise edition (bring-your-own-cloud or on-premise) with dedicated support and advanced security features.

- SigNoz Community: A self-hosted, open-source version that's perfect for getting started and for teams with the capability to manage their own infrastructure.

Simple, Transparent, Usage-Based Pricing

Our pricing model is designed to be straightforward and predictable. We primarily charge based on the volume of data you send us: $0.3 per GB of ingested logs, $0.3 per GB of ingested traces, and $0.1 per million metric samples.
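As a rough sketch of how those rates combine into a monthly estimate, consider the snippet below. The volumes are hypothetical, the function name is ours, and the calculation ignores plan minimums and retention settings.

```python
LOGS_PRICE_PER_GB = 0.3                  # USD per GB of ingested logs
TRACES_PRICE_PER_GB = 0.3                # USD per GB of ingested traces
METRICS_PRICE_PER_MILLION_SAMPLES = 0.1  # USD per million metric samples


def signoz_estimate(logs_gb, traces_gb, metric_samples_millions):
    """Estimate a monthly bill in USD from data volume alone."""
    total = (
        logs_gb * LOGS_PRICE_PER_GB
        + traces_gb * TRACES_PRICE_PER_GB
        + metric_samples_millions * METRICS_PRICE_PER_MILLION_SAMPLES
    )
    return round(total, 2)


# Hypothetical month: 200 GB logs, 100 GB traces, 500 million samples.
estimate = signoz_estimate(logs_gb=200, traces_gb=100, metric_samples_millions=500)
print(f"Estimated monthly bill: ${estimate:,.2f}")
```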

There are no "weird" pricing levers like per-host charges that force you to alter your architecture. Our goal is a simple, scalable model that grows with you.

With SigNoz, you get:

- No surprise bills: Our pricing is easy to understand and forecast.

- Cost-effective at scale: As your data volume grows, our pricing remains competitive.

- Freedom to architect your systems as you see fit: We don't penalize you for using modern, dynamic infrastructure.

The Bottom Line

While Datadog is a feature-rich platform, its complex and often punitive pricing model can be a significant drawback for many teams. Even so, Datadog can be suitable for companies that want many features in one tool, from APM to Cloud SIEM and digital experience monitoring. But if unpredictable costs ever push you to move off Datadog, expect significant pain and a loss of valuable engineering bandwidth.

If you're tired of unpredictable bills and want an observability solution that embraces open standards and offers transparent pricing, you can consider SigNoz. Our automated migration tool makes switching from Datadog seamless by translating your dashboards in minutes without manual rebuilding.