Mastering Microservices Logging - Best Practices Guide

Microservices architectures have revolutionized software development, enabling scalability and flexibility. However, they also introduce complexities in system monitoring and troubleshooting. Effective logging is crucial for maintaining visibility and diagnosing issues in these distributed environments. This comprehensive guide explores best practices for microservices logging, helping you navigate the challenges and implement robust logging strategies. From standardized formats to advanced techniques, you'll learn how to master logging in microservices and enhance your system's observability.

What is Microservices Logging and Why is it Critical?

Microservices architecture is a software design approach in which a large application is built as a collection of small, independent services. The goal of microservices architecture is to improve the scalability and maintainability of a software system by making it easier to develop, test, and deploy individual services independently.

One of the main benefits of microservices architecture is that it allows a more fine-grained approach to building and evolving a software system. Individual parts of the system can be changed without affecting the entire system.

Microservices logging refers to the practice of capturing and managing log data in a distributed system composed of independent, loosely coupled services.

Importance of Logging in Microservices

Logging is critical in microservices architectures for several reasons:

- Distributed Debugging: Logs help trace requests across multiple services, essential for identifying the root cause of issues.

- Performance Monitoring: Log data provides insights into service performance and helps identify bottlenecks.

- Security Auditing: Logs are crucial for detecting and investigating security incidents across the distributed system.

- Compliance: Many industries require comprehensive logging for regulatory compliance.

The key differences between monolithic and microservices logging include:

- Volume: Microservices generate significantly more log data due to their distributed nature.

- Consistency: Maintaining consistent log formats across diverse services is challenging but essential.

- Correlation: Tracing requests across services requires sophisticated log correlation techniques.

Effective microservices logging directly impacts system observability—the ability to understand internal states based on external outputs. It enables faster troubleshooting, proactive issue detection, and informed decision-making for system improvements.

Essential Microservices Logging Best Practices

To implement effective logging in your microservices architecture, follow these best practices:

What to Log

- Events and Transactions: Capture actions, occurrences, and business or system transactions to provide insights into the system's behavior.

- Errors: Log errors, exceptions, and stack traces to aid in troubleshooting and understanding failure points within the system.

Implement Standardized Log Formats

Use a consistent log format across all services to simplify log parsing and analysis. A standardized format should include:

- Timestamp

- Service name

- Log level

- Message

- Contextual data (e.g., user ID, request ID)

Example of a standardized log entry:

```json
{
  "timestamp": "2023-05-30T10:15:30.123Z",
  "service": "user-service",
  "level": "INFO",
  "message": "User login successful",
  "userId": "12345",
  "requestId": "abc-123-xyz"
}
```
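As a minimal sketch using only Python's standard library, the hypothetical helper `build_log_entry` below produces entries with the standardized fields listed above and treats any extra keyword arguments as contextual data. The helper name and the millisecond-precision UTC timestamp format are assumptions chosen to match the example entry.

```python
import json
from datetime import datetime, timezone


def build_log_entry(service, level, message, timestamp=None, **context):
    """Return one standardized log entry as a JSON string.

    The four fixed fields come first; keyword arguments such as userId or
    requestId are appended as contextual data. `timestamp` must be
    timezone-aware if given; it defaults to the current UTC time.
    """
    ts = (timestamp or datetime.now(timezone.utc)).astimezone(timezone.utc)
    entry = {
        # ISO-8601 UTC with millisecond precision, e.g. 2023-05-30T10:15:30.123Z
        "timestamp": ts.strftime("%Y-%m-%dT%H:%M:%S.") + f"{ts.microsecond // 1000:03d}Z",
        "service": service,
        "level": level,
        "message": message,
    }
    entry.update(context)  # contextual data such as user and request IDs
    return json.dumps(entry)


print(build_log_entry(
    "user-service", "INFO", "User login successful",
    timestamp=datetime(2023, 5, 30, 10, 15, 30, 123000, tzinfo=timezone.utc),
    userId="12345", requestId="abc-123-xyz",
))
```

Run as-is, this prints the same entry as the example above, on a single line.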

Use Log Levels

Use standardized logging levels across all services:

- DEBUG: Detailed information for debugging purposes

- INFO: General operational information

- WARN: Non-critical issues or unexpected events

- ERROR: Error conditions that require attention

- FATAL: Critical errors that may lead to service failure

Ensure all team members understand and correctly use these levels to maintain consistency.
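One way to enforce a shared vocabulary is a small helper that every service imports. The sketch below assumes Python services and maps the five levels above onto the standard `logging` levels (Python calls them WARNING and CRITICAL). `STANDARD_LEVELS`, `parse_level`, and `standard_name` are hypothetical names, not part of any library.

```python
import logging

# The shared level names from the list above, mapped onto Python's numeric levels
STANDARD_LEVELS = {
    "DEBUG": logging.DEBUG,
    "INFO": logging.INFO,
    "WARN": logging.WARNING,
    "ERROR": logging.ERROR,
    "FATAL": logging.CRITICAL,
}


def parse_level(name):
    """Convert a configured level name to a numeric level.

    Anything outside the shared vocabulary is rejected, so a typo in a
    service's configuration fails fast instead of silently changing verbosity.
    """
    key = name.strip().upper()
    if key not in STANDARD_LEVELS:
        raise ValueError(f"unknown log level {name!r}; expected one of {sorted(STANDARD_LEVELS)}")
    return STANDARD_LEVELS[key]


def standard_name(levelno):
    """Map a Python level number back to the shared name for log output."""
    for name, value in STANDARD_LEVELS.items():
        if value == levelno:
            return name
    return logging.getLevelName(levelno)


logger = logging.getLogger("order-service")
logger.setLevel(parse_level("warn"))  # e.g. the value of a hypothetical LOG_LEVEL setting
```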

Use Structured logging

Structured logging, particularly in JSON format, offers several advantages:

- Easier to parse and analyze programmatically

- Supports complex data structures

- Facilitates integration with log management tools

Implement structured logging in your services using libraries like Winston (Node.js) or Logback (Java).
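Winston and Logback cover Node.js and Java; for Python services, a comparable minimal sketch is a custom `logging.Formatter` that emits one JSON object per line and copies any fields passed through `extra=`, including nested lists and dictionaries. `JsonFormatter` is a hypothetical class written for illustration; dedicated structured-logging packages offer richer versions of the same idea.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    # Attributes every LogRecord has; anything else was supplied via extra=
    _RESERVED = set(vars(logging.makeLogRecord({}))) | {"message", "asctime"}

    def format(self, record):
        payload = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc)
            .isoformat(timespec="milliseconds"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Copy structured fields passed via extra=..., nested values included
        for key, value in vars(record).items():
            if key not in self._RESERVED:
                payload[key] = value
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload, default=str)


handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("order-service")
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.info("Order placed", extra={"orderId": "o-42", "items": [{"sku": "A1", "qty": 2}]})
```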

Use a unique correlation ID per request

Imagine a situation where a large number of microservices produce millions of log entries every hour. If something odd happened, it would be challenging to identify the underlying cause.

A correlation ID is invaluable in this kind of situation. When a request first enters the system, it is assigned a unique correlation ID, and that identifier is passed along to every service involved in fulfilling the request. When debugging a problem, the first step is to find the correlation ID of the affected request and then search for every log entry that carries it. Better still, include the correlation ID in error logs as well, so that an error can be traced straight back to its request.
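A minimal sketch of this flow in Python, assuming an HTTP header named `X-Correlation-ID` (a common convention, but an assumption here): the ID is reused if the caller sent one and minted with `uuid4` otherwise, stored in a `contextvars.ContextVar`, forwarded on outgoing calls, and stamped onto every log record by a `logging.Filter`. The function names are hypothetical; in a real service, `start_request` would run in request middleware.

```python
import contextvars
import logging
import uuid

# Correlation ID of the request currently being handled ("-" outside a request)
correlation_id = contextvars.ContextVar("correlation_id", default="-")

HEADER = "X-Correlation-ID"  # assumed header name; use whatever your services agree on


def start_request(headers):
    """Reuse the caller's correlation ID, or mint one at the edge of the system."""
    cid = headers.get(HEADER) or str(uuid.uuid4())
    correlation_id.set(cid)
    return cid


def outgoing_headers():
    """Headers to attach when this service calls another service."""
    return {HEADER: correlation_id.get()}


class CorrelationIdFilter(logging.Filter):
    """Stamp every log record with the current correlation ID."""

    def filter(self, record):
        record.correlation_id = correlation_id.get()
        return True


handler = logging.StreamHandler()
handler.addFilter(CorrelationIdFilter())
handler.setFormatter(logging.Formatter("%(levelname)s [%(correlation_id)s] %(message)s"))
logger = logging.getLogger("payment-service")
logger.addHandler(handler)
logger.setLevel(logging.INFO)

start_request({"X-Correlation-ID": "abc-123-xyz"})
logger.info("Charging card")  # INFO [abc-123-xyz] Charging card
```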

Add contextual data in your logs

Include enough context in your logs to understand the events that led up to an error or other issue you are debugging. This might include information such as the request URL, request parameters, and user ID.
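A sketch of one way to attach that context once per request instead of at every call site, using the standard library's `logging.LoggerAdapter`. `RequestContextAdapter` and the field names `url`, `params`, and `user_id` are illustrative assumptions; the overridden `process` merges the bound context with any per-call `extra` fields.

```python
import logging


class RequestContextAdapter(logging.LoggerAdapter):
    """Merge per-request context into every call's extra fields."""

    def process(self, msg, kwargs):
        # Per-call extra fields win over the bound request context
        kwargs["extra"] = {**self.extra, **kwargs.get("extra", {})}
        return msg, kwargs


base = logging.getLogger("order-service")

# Built once when the request arrives, then used for every log call in it
log = RequestContextAdapter(base, {
    "url": "/orders",
    "params": {"status": "pending"},
    "user_id": "4538",
})
log.warning("Inventory check slow", extra={"duration_ms": 812})
```

The context fields become attributes on each record, so a JSON formatter or a format string can include them.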

Do not log sensitive data

Avoid logging sensitive data: credentials such as passwords, and personally identifiable information (PII) such as account numbers and social security numbers. Developers routinely examine logs while debugging, so anything written to a log is exposed to everyone with log access, which is a privacy concern. If your company analyzes user behavior from logs, consider aggregating or anonymizing sensitive fields so that individual users remain anonymous.
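Besides keeping sensitive values out of log messages, structured payloads can be scrubbed by key before they are logged. The sketch below assumes a hypothetical denylist of field names and recursively replaces matching values in nested dictionaries and lists, returning a copy so the original data is untouched. Key-based redaction only catches fields you know about, so it complements, rather than replaces, careful review of what gets logged.

```python
# Hypothetical denylist; extend it with the field names your services use
SENSITIVE_KEYS = {"password", "ssn", "account_number", "card_number", "token"}


def redact(data):
    """Return a copy of data with values of sensitive keys replaced.

    Recurses into nested dicts and lists; key matching is case-insensitive.
    """
    if isinstance(data, dict):
        return {
            key: "[REDACTED]"
            if isinstance(key, str) and key.lower() in SENSITIVE_KEYS
            else redact(value)
            for key, value in data.items()
        }
    if isinstance(data, list):
        return [redact(item) for item in data]
    return data


payload = {
    "user_id": "4538",
    "password": "hunter2",
    "billing": {"card_number": "4111111111111111", "city": "Austin"},
}
print(redact(payload))
# {'user_id': '4538', 'password': '[REDACTED]', 'billing': {'card_number': '[REDACTED]', 'city': 'Austin'}}
```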

Provide informative application logs

The log should contain all the information required to understand an error when it occurs. The more relevant information the microservices logs carry, the faster and more effectively we can troubleshoot.

At the very least, logs need to contain the following details:

- Service name

- User ID

- Hostname

- Correlation ID (can be in the form of a trace ID)

- Request ID

- Timestamp

- Overall duration (at the end of a request)

- Method name

- Call stack (line number of the log)

- Request Method

- Request URI
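Several of these fields need no extra code in Python, because every `LogRecord` already carries `funcName` and `lineno`. The sketch below adds the service name and hostname through a hypothetical `ServiceInfoFilter` and prints them with a format string; request-specific fields such as the user ID and correlation ID would be added per request in the same way.

```python
import logging
import socket


class ServiceInfoFilter(logging.Filter):
    """Add the service name and hostname to every record."""

    def __init__(self, service):
        super().__init__()
        self.service = service
        self.hostname = socket.gethostname()  # resolved once, at startup

    def filter(self, record):
        record.service = self.service
        record.hostname = self.hostname
        return True


# funcName and lineno are standard LogRecord attributes (method name, line number)
FORMAT = ("%(asctime)s %(levelname)s service=%(service)s host=%(hostname)s "
          "method=%(funcName)s line=%(lineno)d %(message)s")

handler = logging.StreamHandler()
handler.addFilter(ServiceInfoFilter("order-service"))
handler.setFormatter(logging.Formatter(FORMAT))
logger = logging.getLogger("order-service")
logger.addHandler(handler)
logger.setLevel(logging.INFO)


def create_order():
    logger.info("Order created")  # logged with method=create_order


create_order()
```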

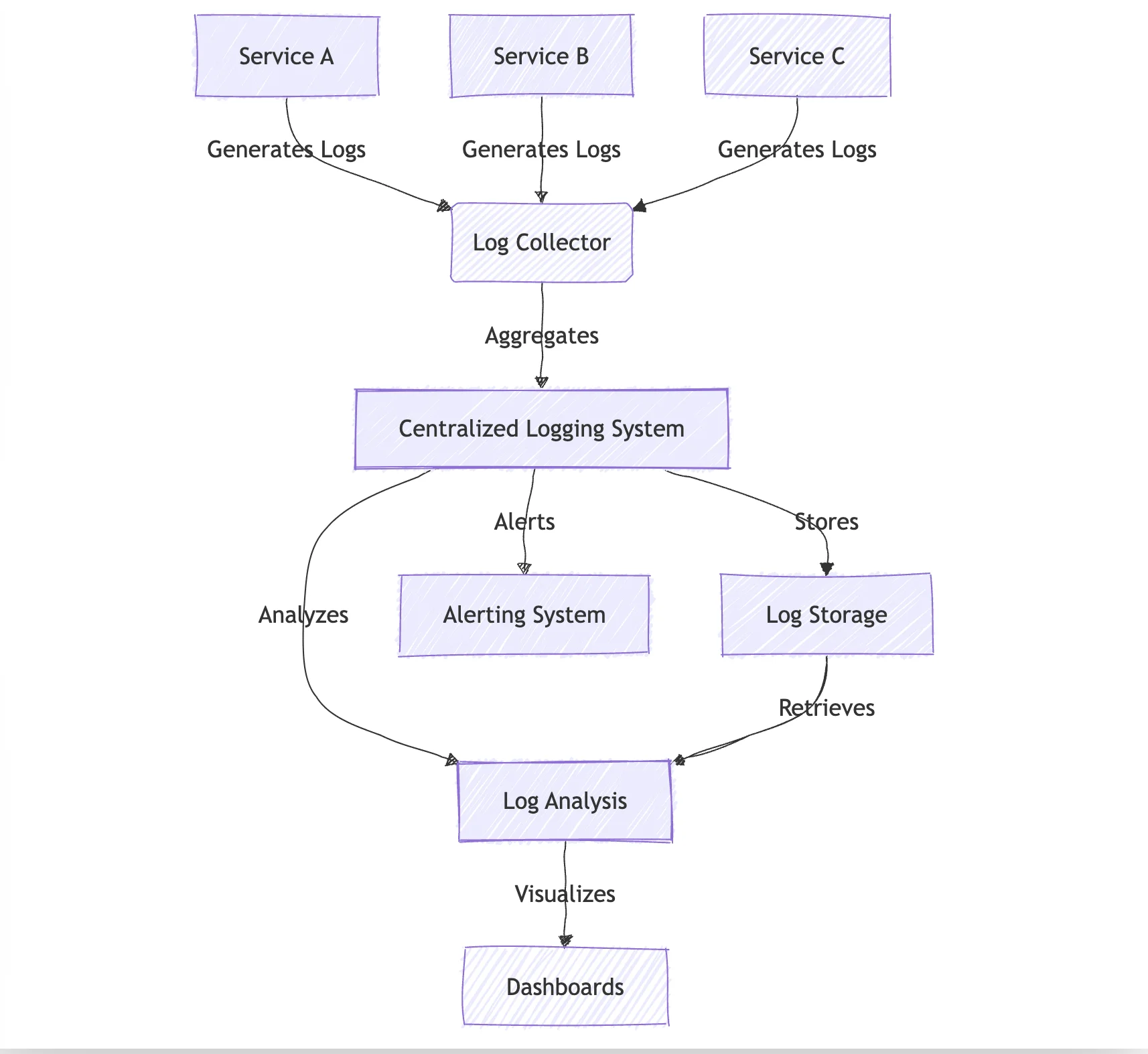

Centralized logging solution

In a microservices architecture, it can be difficult to keep track of logs if they are scattered across multiple services. A centralized logging solution such as SigNoz or Elastic lets you collect, query, and view logs from all your services in one place. Log analytics tools like SigNoz provide dashboards that present log data in an easily digestible format.

Logging performance metrics

In addition to logging events and errors, consider also logging performance metrics such as response times and resource utilization. This will allow you to monitor the performance of your services and identify any potential issues.
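A minimal sketch of logging a response-time metric in Python: the hypothetical `log_duration` context manager times a block with `time.perf_counter` and then logs the elapsed milliseconds and an `ok`/`error` status as structured fields, even when the block raises. The operation names and extra fields are illustrative.

```python
import logging
import time
from contextlib import contextmanager

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("order-service")


@contextmanager
def log_duration(operation, **fields):
    """Log how long the wrapped block took, in milliseconds, as structured fields."""
    start = time.perf_counter()
    status = "ok"
    try:
        yield
    except Exception:
        status = "error"
        raise  # re-raise after noting the failure; the finally block still logs
    finally:
        elapsed_ms = (time.perf_counter() - start) * 1000
        logger.info(
            "%s finished", operation,
            extra={"operation": operation, "duration_ms": round(elapsed_ms, 2),
                   "status": status, **fields},
        )


with log_duration("fetch_inventory", sku="A1"):
    time.sleep(0.05)  # stand-in for a downstream call
```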

The need for a Centralized Logging Service

A single centralized logging service that aggregates logs from all the services should be the preferred solution in a microservices architecture. New and unfamiliar problems are common in software, and when one appears we do not want to juggle multiple log files or separately built dashboards to find out what caused it.

When one or more services fail, we need to know which service experienced an issue and why. It is also difficult to reconstruct the complete request flow in microservices: which services were called, in what sequence, and how often?

Benefits of centralized logging include:

- Unified view: Access logs from all services in one place

- Easier correlation: Simplifies tracing requests across services

- Advanced analysis: Enables complex queries and pattern recognition

- Improved security: Centralized access control and audit trails

Key features to look for in a log management solution:

- Scalability: Ability to handle high log volumes

- Real-time ingestion and search: Quick access to recent logs

- Advanced querying: Support for complex search queries

- Visualization: Dashboards and charts for log data analysis

- Alerting: Automated notifications for specific log patterns

Advanced Techniques for Effective Microservices Logging

Distributed Tracing

Distributed tracing provides end-to-end visibility of requests as they traverse multiple services. It helps identify performance bottlenecks and understand service dependencies.

Implement distributed tracing using tools like Jaeger or Zipkin. These systems work by propagating trace context between services and collecting timing data for each span of a request.

Example of adding tracing to a Node.js application using OpenTelemetry:

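```javascript
// Written against the 1.x OpenTelemetry JS SDK packages; the older
// '@opentelemetry/node' and '@opentelemetry/tracing' packages are deprecated.
const { NodeTracerProvider } = require('@opentelemetry/sdk-trace-node');
const { SimpleSpanProcessor } = require('@opentelemetry/sdk-trace-base');
const { JaegerExporter } = require('@opentelemetry/exporter-jaeger');
const { Resource } = require('@opentelemetry/resources');
const { SemanticResourceAttributes } = require('@opentelemetry/semantic-conventions');

// The service name is set on the resource rather than on the exporter.
const provider = new NodeTracerProvider({
  resource: new Resource({ [SemanticResourceAttributes.SERVICE_NAME]: 'my-service' }),
});

// SimpleSpanProcessor exports each span as soon as it ends;
// BatchSpanProcessor is usually preferred in production.
provider.addSpanProcessor(new SimpleSpanProcessor(new JaegerExporter()));
provider.register();

// Your application code here
```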
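To make the idea of spans concrete without any SDK, here is a toy, in-process sketch (not the OpenTelemetry API): the root span gets a random 16-byte trace ID, every span gets an 8-byte span ID, children inherit the trace ID and record their parent's span ID, and each span records its own duration. Across services, the trace ID and span ID would travel in request headers instead of a `contextvars.ContextVar`; the `span` function and `finished_spans` list are hypothetical.

```python
import contextvars
import secrets
import time
from contextlib import contextmanager

# Toy illustration only; real systems use a tracing SDK such as OpenTelemetry.
_current_span = contextvars.ContextVar("current_span", default=None)
finished_spans = []  # stands in for an exporter


@contextmanager
def span(name):
    parent = _current_span.get()
    record = {
        "name": name,
        # Only the root span starts a new trace; children inherit its trace_id
        "trace_id": parent["trace_id"] if parent else secrets.token_hex(16),
        "span_id": secrets.token_hex(8),
        "parent_id": parent["span_id"] if parent else None,
    }
    token = _current_span.set(record)
    start = time.perf_counter()
    try:
        yield record
    finally:
        record["duration_ms"] = (time.perf_counter() - start) * 1000
        _current_span.reset(token)
        finished_spans.append(record)


with span("GET /orders") as root:
    with span("inventory-service: check stock"):
        time.sleep(0.01)
    with span("payment-service: charge"):
        time.sleep(0.02)

for s in finished_spans:
    print(s["name"], s["span_id"], s["parent_id"], round(s["duration_ms"], 1))
```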

Log Aggregation and Correlation

Use log aggregation to collect and correlate logs from multiple services. This technique helps in understanding the flow of requests and identifying issues that span across services.

Implement log correlation by including correlation IDs in all log entries and using a centralized logging system to aggregate and analyze logs based on these IDs.
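A minimal sketch of the analysis side, assuming JSON log lines from several services that each carry a field named `correlationId` (a hypothetical field name): group the lines by that ID, then sort each group by timestamp to reconstruct the request's path through the system. Sorting timestamps as strings works here only because they share one ISO-8601 UTC format.

```python
import json
from collections import defaultdict


def group_by_correlation_id(lines, key="correlationId"):
    """Group JSON log lines by correlation ID, each group sorted by timestamp."""
    groups = defaultdict(list)
    for line in lines:
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip unstructured lines rather than failing
        if isinstance(entry, dict) and key in entry:
            groups[entry[key]].append(entry)
    for entries in groups.values():
        # Same-format ISO-8601 UTC timestamps sort correctly as strings
        entries.sort(key=lambda e: e.get("timestamp", ""))
    return dict(groups)


logs = [
    '{"timestamp": "2023-05-30T10:15:30.300Z", "service": "payment-service", "correlationId": "abc-123-xyz", "message": "Card charged"}',
    '{"timestamp": "2023-05-30T10:15:30.123Z", "service": "order-service", "correlationId": "abc-123-xyz", "message": "Order received"}',
    '{"timestamp": "2023-05-30T10:15:30.200Z", "service": "order-service", "correlationId": "def-456", "message": "Order received"}',
    "plain text line without structure",
]

for entry in group_by_correlation_id(logs)["abc-123-xyz"]:
    print(entry["timestamp"], entry["service"], entry["message"])
# 2023-05-30T10:15:30.123Z order-service Order received
# 2023-05-30T10:15:30.300Z payment-service Card charged
```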

Log Sampling

In high-volume environments, log sampling can help manage the amount of data while still providing valuable insights. Implement intelligent sampling techniques that capture a representative subset of logs, including all error logs and a percentage of other log levels.

Example of log sampling in Python:

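```python
import logging
import random

logger = logging.getLogger(__name__)


def should_log(level):
    # Always keep error-level logs
    if level in ('ERROR', 'FATAL'):
        return True
    return random.random() < 0.1  # 10% sampling for non-error logs


def log(level, message):
    if should_log(level):
        # Proceed with logging; getLevelName maps names such as 'WARN' or 'FATAL' to numeric levels
        logger.log(logging.getLevelName(level), message)
```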
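The sampler above decides per log line, so a request that is sampled out in one service may be sampled in by another, leaving a partial trail. A common refinement, shown here as an alternative technique rather than part of the original example, is to hash the correlation ID so that every service makes the same keep-or-drop decision for a given request. `keep_request` and the 10% rate are illustrative.

```python
import hashlib

SAMPLE_RATE = 0.1  # keep roughly 10% of requests' non-error logs


def keep_request(correlation_id, rate=SAMPLE_RATE):
    """Deterministically decide whether a request's logs are sampled.

    Every service hashing the same correlation ID reaches the same decision,
    so sampled requests keep their full log trail across services.
    """
    digest = hashlib.sha256(correlation_id.encode("utf-8")).digest()
    # Top 53 bits are exactly representable as a float: uniform in [0, 1)
    bucket = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    return bucket < rate


def should_log(level, correlation_id):
    # Error-level logs are always kept, as in the random sampler
    if level in ("ERROR", "FATAL"):
        return True
    return keep_request(correlation_id)
```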

Automated Log Analysis and Alerting

Implement automated log analysis to detect patterns, anomalies, and potential issues. Set up alerts based on specific log patterns or thresholds to proactively address problems.

Use machine learning algorithms for advanced log analysis, such as clustering similar log messages or detecting anomalies in log patterns.
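As a simple threshold-based sketch (not a machine-learning approach), the hypothetical `ErrorRateAlert` class below keeps the arrival times of recent ERROR and FATAL logs in a sliding window and signals when their count exceeds a threshold. The threshold, window, and alert action are assumptions; the injectable `clock` makes the rule easy to test.

```python
import time
from collections import deque


class ErrorRateAlert:
    """Signal when more than `threshold` ERROR/FATAL logs arrive within `window` seconds."""

    def __init__(self, threshold=5, window=60.0, clock=time.monotonic):
        self.threshold = threshold
        self.window = window
        self.clock = clock
        self.errors = deque()  # arrival times of recent error-level logs

    def observe(self, level):
        """Feed one log entry's level; return True if the alert condition holds."""
        if level not in ("ERROR", "FATAL"):
            return False
        now = self.clock()
        self.errors.append(now)
        # Forget errors that have slid out of the window
        while now - self.errors[0] > self.window:
            self.errors.popleft()
        return len(self.errors) > self.threshold


alert = ErrorRateAlert(threshold=3, window=60)
for level in ["INFO", "ERROR", "ERROR", "ERROR", "ERROR"]:
    if alert.observe(level):
        print("ALERT: error rate above threshold")  # fires on the fourth ERROR
```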

Integrating Observability in logs

When application logs contain request context identifiers, such as trace IDs, span IDs, trace flags, or user-defined baggage as defined by the W3C Trace Context and W3C Baggage specifications, logs can be correlated much more richly with traces, and logs emitted by different components of a distributed system can be correlated with each other. This makes logs significantly more valuable in distributed systems.
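For a sense of what these identifiers look like on the wire, the W3C `traceparent` header has the form `version-traceid-parentid-flags`, for example `00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01`. The sketch below, which handles only version `00`, turns an incoming header into log fields. The header's middle field is the caller's span ID, so it is logged here as `parent_span_id`; a tracing SDK would normally start a new child span and log that span's ID instead. `trace_fields` is a hypothetical helper.

```python
import re

# version-traceid-parentid-flags, lowercase hex, as in the W3C Trace Context spec
TRACEPARENT_RE = re.compile(r"^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def trace_fields(traceparent):
    """Turn a traceparent header into log fields, or {} if it is missing or invalid."""
    match = TRACEPARENT_RE.match(traceparent.strip()) if traceparent else None
    if not match:
        return {}
    version, trace_id, parent_id, flags = match.groups()
    # Version ff and all-zero IDs are invalid per the spec
    if version == "ff" or set(trace_id) == {"0"} or set(parent_id) == {"0"}:
        return {}
    return {"trace_id": trace_id, "parent_span_id": parent_id, "trace_flags": int(flags, 16)}


incoming = {"traceparent": "00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01"}
print(trace_fields(incoming.get("traceparent")))
# {'trace_id': '4bf92f3577b34da6a3ce929d0e0e4736', 'parent_span_id': '00f067aa0ba902b7', 'trace_flags': 1}
```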

But before we go further, let's briefly review what observability is.

What is observability?

We believe the aim of observability is to solve customer issues quickly. Creating monitoring dashboards is useless if it can’t help engineering teams quickly identify the root causes of performance issues.

A modern distributed software system has a lot of moving components. So while setting up monitoring, you might not know what answers you would need to solve an issue. And that’s where observability comes into the picture.

Observability enables application owners to get answers to any question that might arise while debugging application issues.

Logs, metrics, and traces as pillars of observability

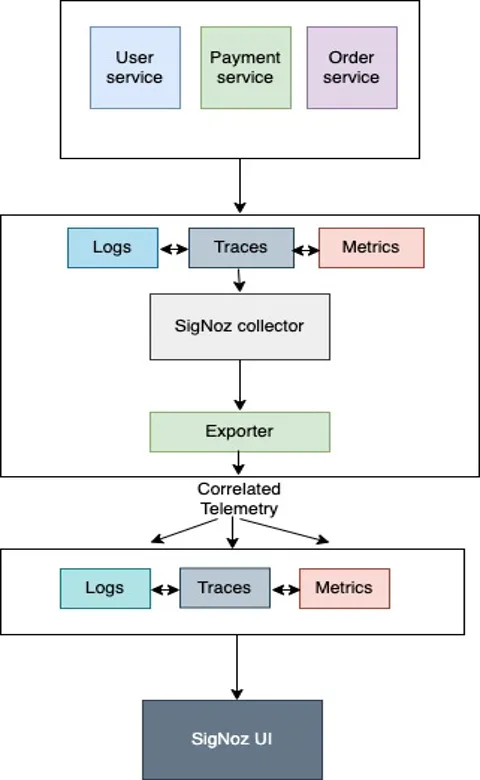

Logs, metrics, and traces are commonly referred to as three pillars of observability. These three telemetry signals when correlated can drive application insights faster.

For robust observability, having a seamless way to correlate your telemetry signals is critical. For example, if you see that the latency of a particular service is high at the moment, can you deep-dive into relevant logs quickly? Can you correlate your metrics with traces to figure out where in the request journey the problem occurred?

Now the question is how do we generate, collect, and store telemetry signals so that they can be easily correlated and analyzed together. That’s where OpenTelemetry comes into the picture.

OpenTelemetry is a set of APIs, SDKs, libraries, and integrations that aims to standardize the generation, collection, and management of telemetry data (logs, metrics, and traces). OpenTelemetry is a Cloud Native Computing Foundation project created from the merger of OpenCensus (from Google) and OpenTracing (a CNCF project).

The data you collect with OpenTelemetry is vendor-agnostic and can be exported in many formats. Telemetry data has become critical to observe the state of distributed systems. With microservices and polyglot architectures, there was a need to have a global standard. OpenTelemetry aims to fill that space and is doing a great job at it thus far.

SigNoz is built to support OpenTelemetry natively.

We emit logs, traces, and metrics in a way that is compliant with OpenTelemetry data models, and send the data through SigNoz collector, where it can be enriched and processed uniformly.

OpenTelemetry defines a log data model. The purpose of the data model is to have a common understanding of what a LogRecord is, what data needs to be recorded, transferred, stored, and interpreted by a logging system. Newly designed logging systems are expected to emit logs according to OpenTelemetry’s log data model.
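To illustrate the shape of that model, the sketch below maps a flat JSON entry like the standardized example earlier onto top-level LogRecord fields: `Timestamp` in nanoseconds since the Unix epoch, `SeverityText` and `SeverityNumber`, `Body`, `TraceId`, `SpanId`, `Resource`, and `Attributes`. Severity numbers use the lowest value of each range the data model defines (DEBUG 5 to 8, INFO 9 to 12, and so on). The function name, the input field names, and the fallback service name are assumptions.

```python
from datetime import datetime, timezone

# Lowest SeverityNumber of each range in the OpenTelemetry log data model
SEVERITY_NUMBER = {"TRACE": 1, "DEBUG": 5, "INFO": 9, "WARN": 13, "ERROR": 17, "FATAL": 21}
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_otel_log_record(entry, default_service="unknown_service"):
    """Map a flat JSON-style log entry onto top-level OpenTelemetry LogRecord fields."""
    fields = dict(entry)  # work on a copy
    ts = datetime.fromisoformat(fields.pop("timestamp").replace("Z", "+00:00"))
    delta = ts - EPOCH
    level = fields.pop("level").upper()
    return {
        # Integer nanoseconds since the Unix epoch, computed without float rounding
        "Timestamp": (delta.days * 86400 + delta.seconds) * 10**9 + delta.microseconds * 1000,
        "SeverityText": level,
        "SeverityNumber": SEVERITY_NUMBER.get(level, 0),  # 0 means unspecified
        "Body": fields.pop("message"),
        "TraceId": fields.pop("trace_id", None),
        "SpanId": fields.pop("span_id", None),
        "Resource": {"service.name": fields.pop("service", default_service)},
        "Attributes": fields,  # whatever contextual fields remain
    }


record = to_otel_log_record({
    "timestamp": "2023-05-30T10:15:30.123Z", "service": "user-service",
    "level": "INFO", "message": "User login successful", "userId": "12345",
})
print(record["SeverityNumber"], record["Attributes"])  # 9 {'userId': '12345'}
```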

Now let’s look at a practical example of correlating logs with traces.

How do you add context information to logs in a simple Go application?

We have implemented the correlation of logs and traces in a sample Golang Application.

We instrument our Go application to generate traces in the way described in this documentation.

Next, let's look at how we add context information to the logs. We use the zap library for logging.

To add trace context information such as traceID, spanID, and traceFlags to the logs, we implemented a logger wrapper around zap. The wrapper reads the span from the context passed to it and adds the span's trace ID, span ID, and trace flags as fields on each log message. If the context does not contain a recording span, the wrapper adds nothing to the logs.

Step 1: We do an initial setup of the zap logger.

```go
package logger

import (
	"os"

	"go.uber.org/zap"
	"go.uber.org/zap/zapcore"
)

// logger is the package-level zap logger shared by the context wrapper.
var logger *zap.Logger

// SetupLog writes JSON logs to application.log and human-readable logs to stdout.
func SetupLog() error {
	encoderCfg := zap.NewProductionEncoderConfig()
	encoderCfg.TimeKey = "time"
	encoderCfg.EncodeTime = zapcore.TimeEncoderOfLayout("2006-01-02T15:04:05")
	encoderCfg.MessageKey = "message"
	encoderCfg.CallerKey = zapcore.OmitKey

	fileEncoder := zapcore.NewJSONEncoder(encoderCfg)
	consoleEncoder := zapcore.NewConsoleEncoder(encoderCfg)

	logFile, err := os.OpenFile("application.log", os.O_APPEND|os.O_CREATE|os.O_WRONLY, 0644)
	if err != nil {
		return err
	}
	writer := zapcore.AddSync(logFile)
	defaultLogLevel := zapcore.DebugLevel

	// Tee every entry to both the JSON log file and the console.
	core := zapcore.NewTee(
		zapcore.NewCore(fileEncoder, writer, defaultLogLevel),
		zapcore.NewCore(consoleEncoder, zapcore.AddSync(os.Stdout), defaultLogLevel),
	)
	logger = zap.New(core, zap.AddCaller(), zap.AddStacktrace(zapcore.ErrorLevel))
	return nil
}
```

Step 2: Next, we wrap the zap logger together with a context so that trace context information can be added to the log messages.

```go
package logger

import (
	"context"

	"go.opentelemetry.io/otel/trace"
	"go.uber.org/zap"
)

// LoggerWithCtx pairs the shared zap logger with a request context.
type LoggerWithCtx struct {
	*zap.Logger
	ctx context.Context
}

// Ctx returns a logger bound to ctx, using the logger created by SetupLog.
func Ctx(ctx context.Context) *LoggerWithCtx {
	return &LoggerWithCtx{Logger: logger, ctx: ctx}
}

// logFields appends span_id, trace_id and trace_flags when ctx holds a recording span.
func (l *LoggerWithCtx) logFields(ctx context.Context, fields []zap.Field) []zap.Field {
	span := trace.SpanFromContext(ctx)
	if span.IsRecording() {
		sc := span.SpanContext()
		fields = append(fields,
			zap.String("span_id", sc.SpanID().String()),
			zap.String("trace_id", sc.TraceID().String()),
			zap.Int("trace_flags", int(sc.TraceFlags())),
		)
	}
	return fields
}

// Info logs msg with the caller's fields plus any trace context fields.
func (l *LoggerWithCtx) Info(msg string, fields ...zap.Field) {
	l.Logger.Info(msg, l.logFields(l.ctx, fields)...)
}
```

Step 3: Services that use this logger pass the request context object, and the wrapper adds trace_id, span_id, and trace_flags fields to the structured log messages. This option is mainly useful with backends that don't support OTLP and instead parse log messages to extract structured information.

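```go
import log "github.com/vabira200/golang-instrumentation/logger"

// Inside an HTTP handler: r is the *http.Request, metadata is a []zap.Field built by the handler
log.Ctx(r.Context()).Info("Order controller called", metadata...)
```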

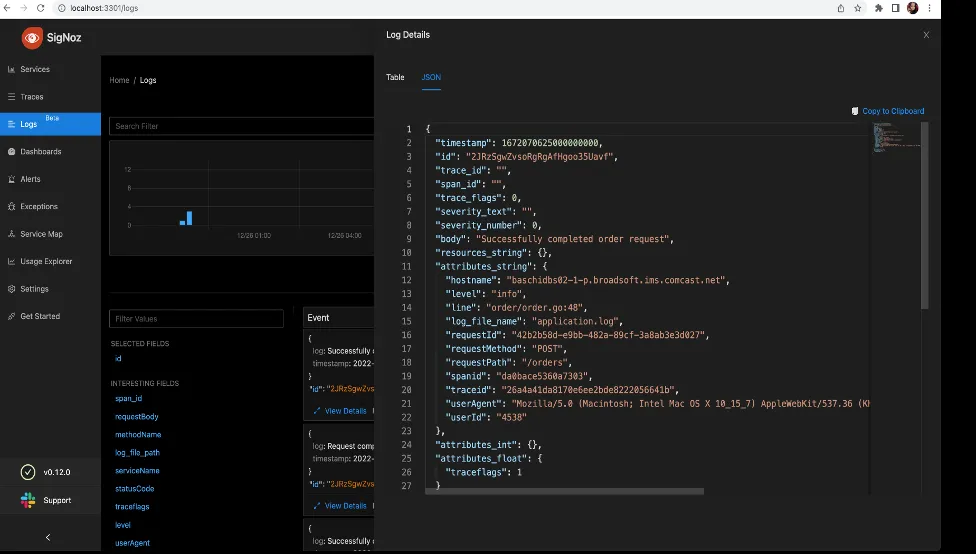

Step 4: With the wrapper integrated with the zap library, our logs now include traceid, spanid, and traceflags, following the OpenTelemetry log data model. For comparison, let's look at the logs with and without the wrapper.

Before Integration:

```json
{
  "hostname": "baschidbs02-1-p.broadsoft.ims.comcast.net",
  "level": "info",
  "line": "order/order.go:48",
  "requestId": "42b2b58d-e9bb-482a-89cf-3a8ab3e3d027",
  "requestMethod": "POST",
  "message": "Successfully completed order request",
  "time": "2022-12-26T21:33:45",
  "requestPath": "/orders",
  "userAgent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36",
  "userId": "4538"
}
```

After Integration:

```json
{
  "hostname": "baschidbs02-1-p.broadsoft.ims.comcast.net",
  "level": "info",
  "line": "order/order.go:48",
  "requestId": "42b2b58d-e9bb-482a-89cf-3a8ab3e3d027",
  "requestMethod": "POST",
  "message": "Successfully completed order request",
  "time": "2022-12-26T21:33:45",
  "requestPath": "/orders",
  "spanid": "da0bace5360a7303",
  "traceid": "26a4a41da8170e6ee2bde8222056641b",
  "traceflags": 1,
  "userAgent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36",
  "userId": "4538"
}
```

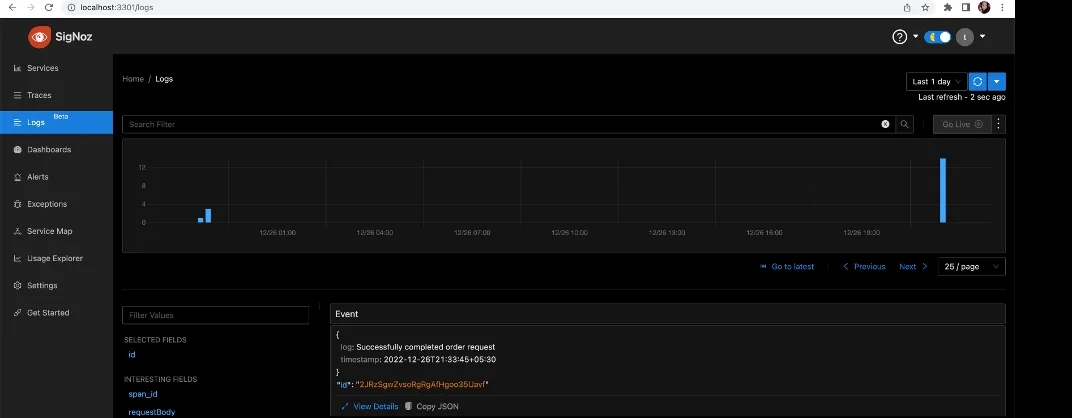

OpenTelemetry Log Collection with SigNoz

In this example, we configure our application to write its logs to a log file. The SigNoz OpenTelemetry collector then reads the logs from that file and exports them with the clickhouselogsexporter to ClickHouse, from where they show up in the SigNoz UI.

Steps to collect Application Logs with OpenTelemetry

Clone the SigNoz GitHub repository and start the SigNoz server:

```bash
git clone -b main https://github.com/SigNoz/signoz.git
cd signoz/deploy/
./install.sh
```

Or set up SigNoz Cloud

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

Modify the docker-compose.yaml file present inside deploy/docker by adding volumes for the application log file.

```yaml
otel-collector:
  image: signoz/signoz-otel-collector:0.88.11
  command: ["--config=/etc/otel-collector-config.yaml"]
  user: root # required for reading docker container logs
  volumes:
    - ./otel-collector-config.yaml:/etc/otel-collector-config.yaml
    - /var/lib/docker/containers:/var/lib/docker/containers:ro
    - /<path_to_application_log_file>/application.log:/tmp/application.log
```

Add a filelog receiver to the otel-collector-config.yaml file in deploy/docker:

```yaml
receivers:
  # keep the existing receivers (such as otlp) alongside filelog
  filelog:
    include: ["/tmp/application.log"]
    start_at: beginning
    operators:
      - type: json_parser
        timestamp:
          parse_from: attributes.time
          layout: '%Y-%m-%dT%H:%M:%S'
      - type: move
        from: attributes.message
        to: body
      - type: remove
        field: attributes.time
```

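Here the json_parser operator parses each log line into attributes and uses its timestamp block to read the time field, the move operator moves message from attributes to the log body, and the remove operator drops the time attribute, which is no longer needed. You can read more about operators here.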

For more configuration options available for the filelog receiver, see here.
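To make the operator chain concrete, here is a rough Python approximation of what the three operators do to a single line written by the zap logger. It is an illustration only: the function name is hypothetical, and the collector's internal log record has more fields than the three modeled here.

```python
import json
from datetime import datetime


def apply_filelog_operators(line):
    """Approximate the json_parser, move and remove operators on one log line."""
    record = {"timestamp": None, "body": line, "attributes": {}}
    # json_parser: parse the body (the raw line) into attributes ...
    record["attributes"] = json.loads(record["body"])
    # ... and its timestamp block: read attributes.time using the configured layout
    record["timestamp"] = datetime.strptime(record["attributes"]["time"], "%Y-%m-%dT%H:%M:%S")
    # move: attributes.message -> body
    record["body"] = record["attributes"].pop("message")
    # remove: drop attributes.time, which is now redundant
    del record["attributes"]["time"]
    return record


line = ('{"level":"info","time":"2022-12-26T21:33:45",'
        '"message":"Successfully completed order request",'
        '"spanid":"da0bace5360a7303","traceid":"26a4a41da8170e6ee2bde8222056641b","traceflags":1}')
print(apply_filelog_operators(line)["body"])  # Successfully completed order request
```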

Next we will modify our pipeline inside otel-collector-config.yaml to include the receiver we have created above.

```yaml
service:
  # ... existing settings
  pipelines:
    # ... existing traces and metrics pipelines
    logs:
      receivers: [otlp, filelog]
      processors: [batch]
      exporters: [clickhouselogsexporter]
```

Now restart the OTel collector container so that the changes are applied and the logs are read from the application log file. In the Traces tab, you can see the traces along with their trace IDs and spans.

Next, switch to the Logs tab and look for the log entry that corresponds to the trace above.

The complete log for that trace appears with its traceid, spanid, and traceflags fields.

Security Considerations in Microservices Logging

Securing log data is crucial in microservices architectures. Implement these practices to ensure log security:

- Protect Sensitive Data: Avoid logging sensitive information like passwords or personal data. Use masking techniques for partial logging of sensitive fields.

- Encrypt Log Transmission: Use secure protocols (e.g., TLS) when transmitting logs from services to the central log management system.

- Secure Log Storage: Encrypt logs at rest and implement access controls to prevent unauthorized access.

- Implement Audit Trails: Log all access to the logging system itself to maintain an audit trail of who viewed or modified log data.

- Compliance Adherence: Ensure your logging practices comply with relevant data protection regulations like GDPR or CCPA.

Example of masking sensitive data in logs:

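```python
import logging
import re

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)

# 16-digit card numbers, optionally grouped in fours by spaces or hyphens
CARD_RE = re.compile(r'\b(?:\d{4}[-\s]?){3}\d{4}\b')
# Email addresses (simplified pattern)
EMAIL_RE = re.compile(r'\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b')

def mask_sensitive_data(log_message):
    log_message = CARD_RE.sub('XXXX-XXXX-XXXX-XXXX', log_message)
    log_message = EMAIL_RE.sub('XXXX@XXXX.XXX', log_message)
    return log_message

# Use this function before logging; the message becomes
# "User email: XXXX@XXXX.XXX, CC: XXXX-XXXX-XXXX-XXXX"
logger.info(mask_sensitive_data("User email: john@example.com, CC: 1234-5678-9012-3456"))
```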
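Calling a masking function by hand before every log call is easy to forget. A sketch of applying the same kind of masking automatically uses a hypothetical `logging.Filter` attached to a handler: the filter merges the message with its arguments, masks the result, and stores it back on the record. Filters attached to a handler also see records propagated from child loggers, whereas filters attached to a logger only see records logged directly on that logger, which is why this sketch attaches it to the handler.

```python
import logging
import re

# (pattern, replacement) pairs for card numbers and email addresses
PATTERNS = [
    (re.compile(r"\b(?:\d{4}[-\s]?){3}\d{4}\b"), "XXXX-XXXX-XXXX-XXXX"),
    (re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"), "XXXX@XXXX.XXX"),
]


class MaskingFilter(logging.Filter):
    """Mask sensitive values in every record before the handler formats it."""

    def filter(self, record):
        message = record.getMessage()  # apply msg % args first
        for pattern, replacement in PATTERNS:
            message = pattern.sub(replacement, message)
        record.msg, record.args = message, ()
        return True


handler = logging.StreamHandler()
handler.addFilter(MaskingFilter())
logger = logging.getLogger("user-service")
logger.addHandler(handler)
logger.warning("Signup for %s with card %s", "john@example.com", "1234 5678 9012 3456")
# Signup for XXXX@XXXX.XXX with card XXXX-XXXX-XXXX-XXXX
```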

Overcoming Common Microservices Logging Challenges

Microservices logging presents unique challenges. Here's how to address some common issues:

- Managing Log Volume:

  - Implement log rotation and compression

  - Use log sampling for high-volume services

  - Set appropriate retention policies

- Ensuring Consistency Across Polyglot Services:

  - Define and enforce logging standards across teams

  - Use language-agnostic logging formats (e.g., JSON)

  - Implement centralized logging configuration management

- Handling Containerized and Serverless Environments:

  - Use container-aware logging drivers (e.g., Docker's json-file driver)

  - Implement structured logging for easier parsing

  - Leverage cloud-native logging solutions for serverless functions

- Troubleshooting Complex Service Interactions:

  - Implement distributed tracing

  - Use correlation IDs consistently across all services

  - Leverage log aggregation and analysis tools for end-to-end visibility
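For log rotation with compression, Python's standard library can handle both: `RotatingFileHandler` rotates by size, and its `namer` and `rotator` hooks can gzip each rotated file. The sketch below uses hypothetical helper names, and its size and backup limits are illustrative; in containers, rotation is often handled by the logging driver or log shipper instead.

```python
import gzip
import logging
import logging.handlers
import os
import shutil
import tempfile


def gzip_namer(name):
    # app.log.1 -> app.log.1.gz
    return name + ".gz"


def gzip_rotator(source, dest):
    # Compress the just-closed log file into dest, then remove the original
    with open(source, "rb") as src, gzip.open(dest, "wb") as dst:
        shutil.copyfileobj(src, dst)
    os.remove(source)


def rotating_handler(path, max_bytes=10 * 1024 * 1024, backups=5):
    """Size-based rotation that keeps `backups` gzip-compressed files."""
    handler = logging.handlers.RotatingFileHandler(path, maxBytes=max_bytes, backupCount=backups)
    handler.namer = gzip_namer
    handler.rotator = gzip_rotator
    return handler


# Demo with a tiny size limit so rotation happens quickly
log_dir = tempfile.mkdtemp()
log_path = os.path.join(log_dir, "app.log")
logger = logging.getLogger("rotation-demo")
logger.addHandler(rotating_handler(log_path, max_bytes=200, backups=3))
logger.setLevel(logging.INFO)
for i in range(50):
    logger.info("order %d processed", i)
print(sorted(os.listdir(log_dir)))  # app.log plus up to three app.log.N.gz files
```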

Key Takeaways

Mastering microservices logging is essential for maintaining observable and manageable distributed systems. Remember these key points:

- Standardize log formats and implement structured logging for consistency

- Use correlation IDs and distributed tracing to track requests across services

- Centralize logs for easier management, analysis, and correlation

- Implement robust security measures to protect sensitive log data

- Leverage integrated observability tools like SigNoz for comprehensive monitoring

Handling logs at scale is difficult: a large microservices architecture can emit millions of log lines per minute. By following these best practices and continuously refining your logging strategy, you'll be well-equipped to handle the complexities of microservices architectures and maintain highly observable, performant systems.

When your code ditches you, logs become your best friend!

FAQs


What is microservices logging and why is it important?

Microservices logging is the practice of capturing and managing log data in a distributed system composed of independent, loosely coupled services. It's crucial for maintaining visibility, diagnosing issues, monitoring performance, ensuring security, and achieving compliance in complex distributed environments.

What are the key differences between monolithic and microservices logging?

The main differences include:

- Volume: Microservices generate significantly more log data due to their distributed nature.

- Consistency: Maintaining consistent log formats across diverse services is challenging but essential.

- Correlation: Tracing requests across services requires sophisticated log correlation techniques.

- Complexity: Debugging and troubleshooting in a distributed system is more complex.

- Tools: Specialized tools for log aggregation and analysis are often required for microservices.

What are some best practices for microservices logging?

Key best practices include:

- Implement standardized log formats across all services.

- Use structured logging, preferably in JSON format.

- Utilize log levels consistently (DEBUG, INFO, WARN, ERROR, FATAL).

- Use unique correlation IDs for each request to trace across services.

- Include contextual data in logs (e.g., service name, user ID, request ID).

- Avoid logging sensitive information.

- Implement a centralized logging solution for easier management and analysis.

How can I implement distributed tracing in my microservices architecture?

To implement distributed tracing:

- Choose a tracing framework (e.g., Jaeger, Zipkin, or OpenTelemetry).

- Instrument your services to generate trace data.

- Propagate trace context between services using headers or metadata.

- Set up a centralized collector to aggregate trace data.

- Use a visualization tool to analyze and explore traces.

What security considerations should I keep in mind for microservices logging?

Important security considerations include:

- Encrypt log data both in transit and at rest.

- Implement strict access controls for log data.

- Mask or exclude sensitive information from logs.

- Maintain audit trails for log access and modifications.

- Ensure compliance with data protection regulations like GDPR or CCPA.

- Regularly review and update logging practices to address emerging security threats.

How does centralized logging benefit microservices architectures?

Centralized logging offers several benefits:

- Provides a unified view of logs from all services.

- Simplifies log correlation and analysis across the entire system.

- Enhances security through centralized access control and monitoring.

- Enables advanced querying and visualization capabilities.

- Facilitates easier troubleshooting and performance optimization.

- Supports better scalability and log retention management.

What is OpenTelemetry and how does it relate to microservices logging?

OpenTelemetry is an open-source observability framework that provides a standardized way to generate, collect, and manage telemetry data (logs, metrics, and traces) in distributed systems. It helps in creating a consistent approach to instrumentation across different services and technologies in a microservices architecture, making it easier to correlate logs with other observability data.
