Logs Pipelines transform your raw logs into structured, queryable data before storage. Extract fields, parse JSON, normalize attributes, and enrich your logs from the SigNoz UI without redeploying your applications.

Why Use Logs Pipelines?

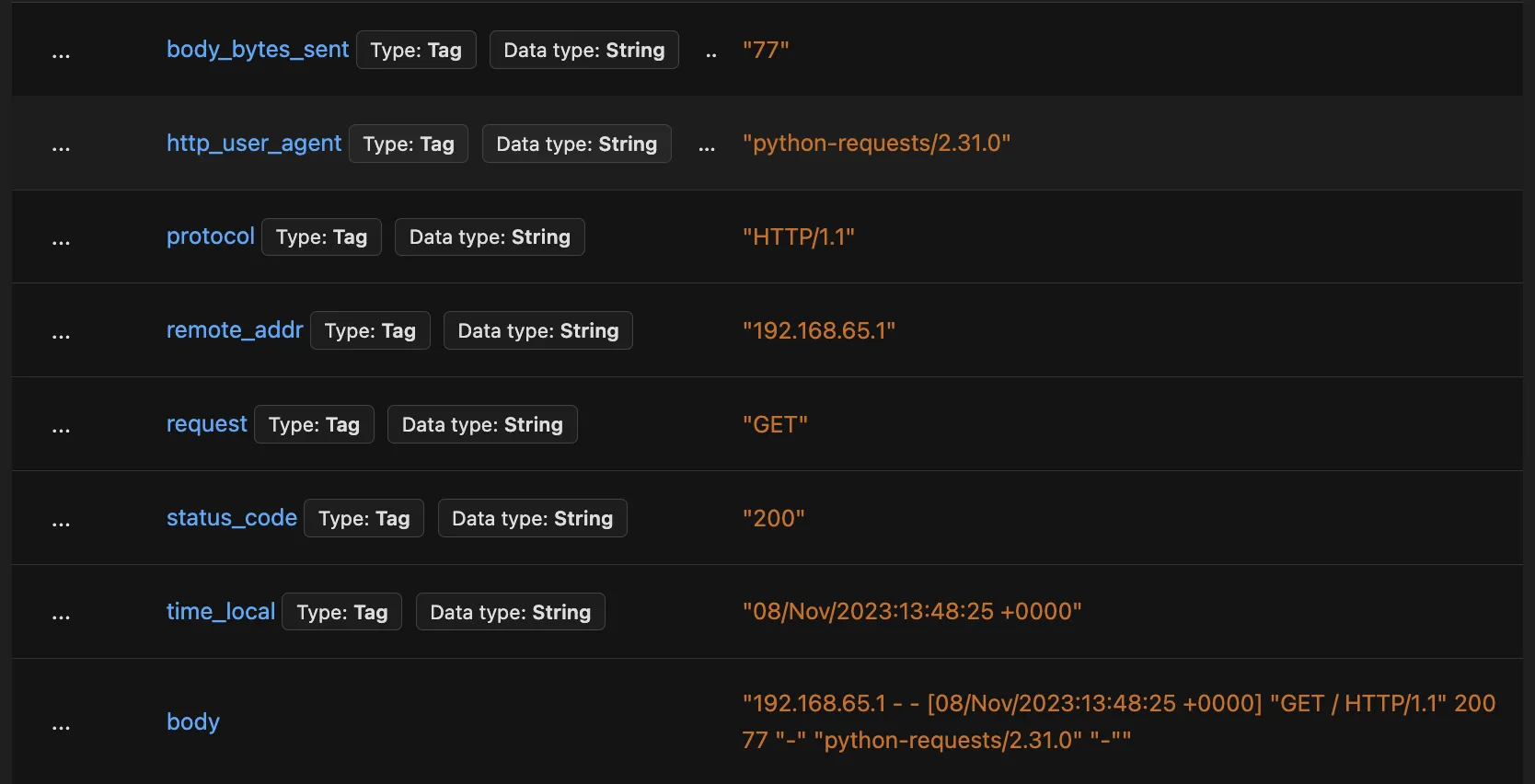

Raw logs contain valuable information, but it is often buried in unstructured text. Without parsing, you can only run full-text searches against the log body, which makes filtering and aggregating on individual values difficult or impossible.

With Logs Pipelines, you can extract fields such as the user agent, status code, or client IP address into structured attributes. These attributes become queryable columns that you can filter, group by, and aggregate.

Once fields are extracted, you can build dashboards and reports on them, such as tracking requests by user agent or analyzing error rates by endpoint.
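To make the idea concrete, here is a plain-Python sketch of what a regex-based extraction step does conceptually: it turns an unstructured log body into named attributes, which can then be grouped and counted. This is not how SigNoz implements pipelines (in SigNoz you configure parsers in the UI rather than writing code). The access-log format, the sample lines, and the attribute names `client_ip`, `method`, `path`, `status_code`, and `user_agent` are illustrative assumptions.

```python
import re
from collections import Counter

# Hypothetical raw log bodies in an access-log style format (illustrative only).
RAW_LOGS = [
    '203.0.113.7 - - [10/Oct/2024:13:55:36 +0000] "GET /api/orders HTTP/1.1" 200 512 "-" "curl/8.4.0"',
    '198.51.100.2 - - [10/Oct/2024:13:55:37 +0000] "POST /api/login HTTP/1.1" 500 87 "-" "Mozilla/5.0"',
    '203.0.113.7 - - [10/Oct/2024:13:55:38 +0000] "GET /api/orders HTTP/1.1" 200 498 "-" "curl/8.4.0"',
]

# Each named group becomes an attribute name in the parsed result.
ACCESS_LOG = re.compile(
    r'(?P<client_ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status_code>\d{3}) \S+ "[^"]*" "(?P<user_agent>[^"]*)"'
)


def parse(body: str) -> dict:
    """Return the extracted attributes, or an empty dict if the body does not match."""
    match = ACCESS_LOG.match(body)
    return match.groupdict() if match else {}


attributes = [parse(line) for line in RAW_LOGS]

# Once values are structured attributes, grouping and aggregation are straightforward.
requests_by_user_agent = Counter(a["user_agent"] for a in attributes if a)
errors_by_path = Counter(
    a["path"] for a in attributes if a and a["status_code"].startswith("5")
)

print(requests_by_user_agent)
print(errors_by_path)
```

Lines that do not match the pattern yield an empty dict and are skipped, which loosely mirrors how a parser leaves unmatched logs unchanged instead of dropping them.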

Getting Started

- Concepts — Understand pipelines and processors

- Logs Parsing — Learn to create pipelines and choose the right parser

Parsing Guides

- JSON Parser — Parse structured JSON log bodies

- Regex Parser — Extract fields using regular expressions

- Grok Parser — Use pre-defined patterns for common log formats

- Nested JSON — Flatten deeply nested JSON structures

Log Enrichment

- Timestamp Parsing — Extract timestamps from log messages

- Severity Parsing — Map log levels to OpenTelemetry severity

- Trace Correlation — Link logs to traces for end-to-end debugging

- Resource Attributes — Set service names from container metadata

Reference

- All Processors — Complete list of available log processors