Feature in Spotlight: Access Token Management & Onboarding [Day 5] 🐝

Welcome to SigNoz Launch Week Day 5!

Today’s feature in the spotlight is Access Token Management & Onboarding. And as it is our last day, we have a bonus for you 🥳 - a chat with SigNoz CTO, Ankit Nayan.

Let’s dive in.

Builders - Vishal & Chitransh

But first, let’s meet the builders behind today’s features in the spotlight.

Meet Vishal, our founding backend engineer who joined SigNoz in its early days. Vishal has spearheaded multiple initiatives at SigNoz, starting with the development of the trace module. At that time, Jaeger was the only open-source tracing alternative available, and Vishal led numerous innovations in the SigNoz APM, which our users have come to love.

Meet Chitransh, our dedicated DevRel, who passionately works to enhance the developer experience at SigNoz. With extensive experience working alongside developers, Chitransh has developed a keen eye for clean and clear documentation. And, with his insights into the developer mindset, he is taking SigNoz onboarding to the next level.

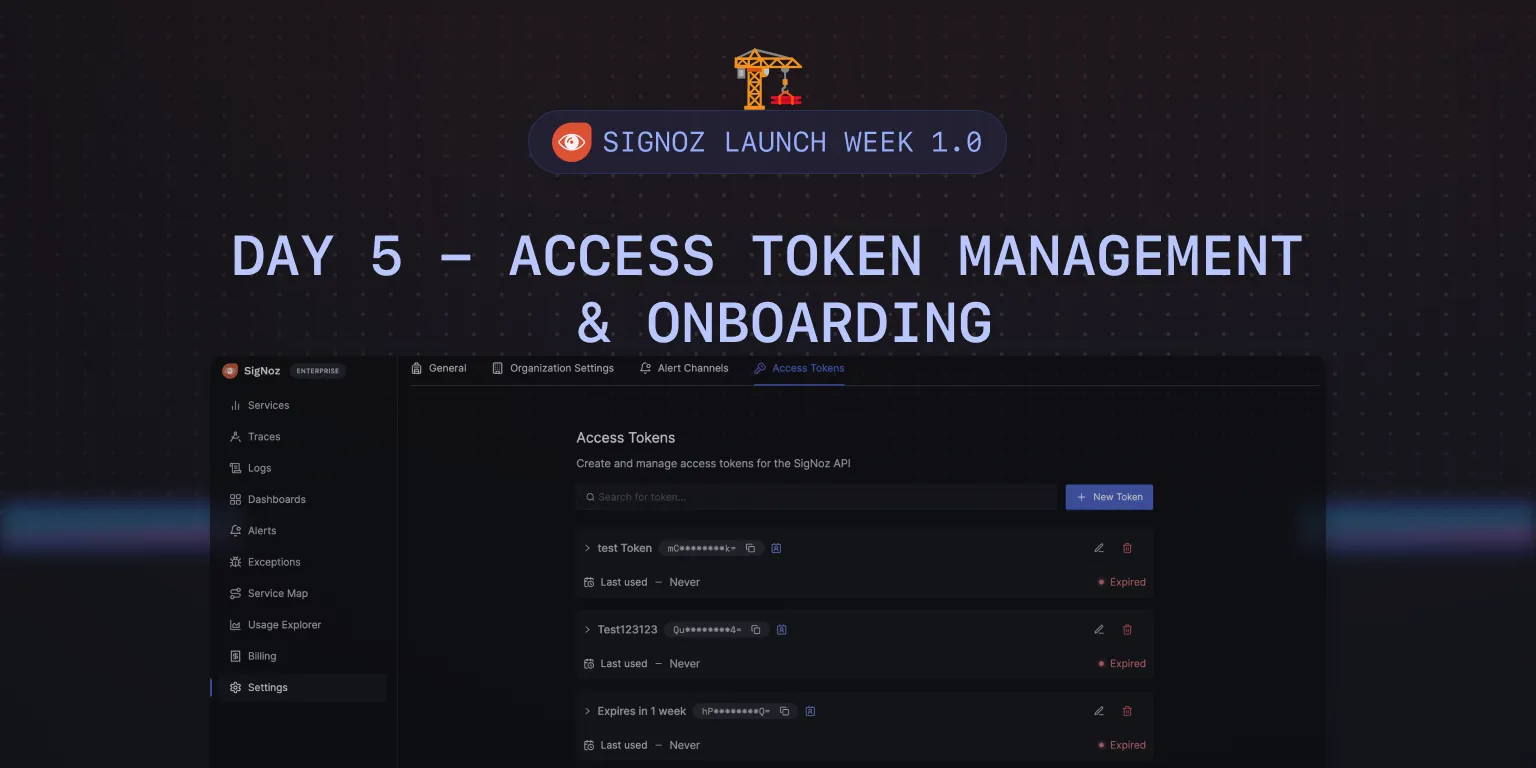

Introducing Access Token Management in SigNoz

Access Token Management was a frequently requested feature among SigNoz users. Before we enabled it through our UI, users had to reach out to us via email. There are many interesting use cases that these tokens enable:

Customized Access Levels: Users needed a way to manage access tokens that could have varying levels of access (e.g., viewer, editor, admin levels) to suit different roles within their teams.

Correlating Business Metrics with Observability Data: One significant use case involved correlating observability data from SigNoz with business metrics from other analytics platforms. Users wanted to demonstrate how improvements in system performance (e.g., reduced API latencies) directly impacted business outcomes, such as increased sales or user engagement.

Custom Alerts and Automation: Users sought to create custom alerts based on specific, non-standard criteria and automate responses to certain conditions.

Programmatic Access and Automation: The ability to programmatically access SigNoz data for custom reporting, alert management, or operational automation was a critical requirement. Users wanted to write scripts or use automated tools to fetch data, create alerts, or manage system resources, providing a more flexible and responsive infrastructure.

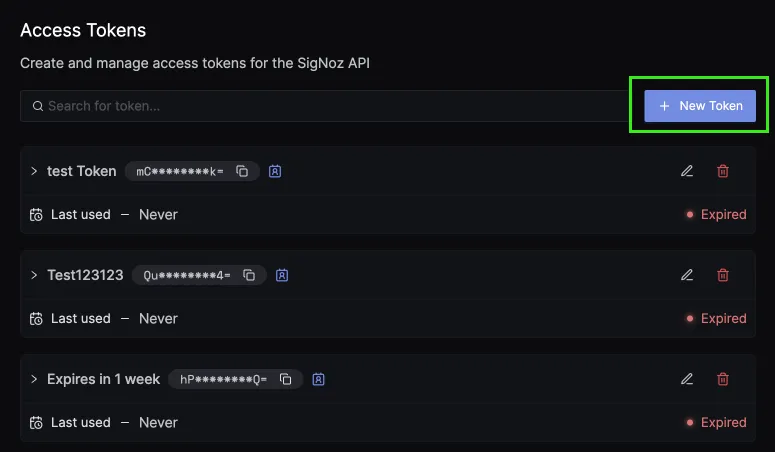

Using Access Token

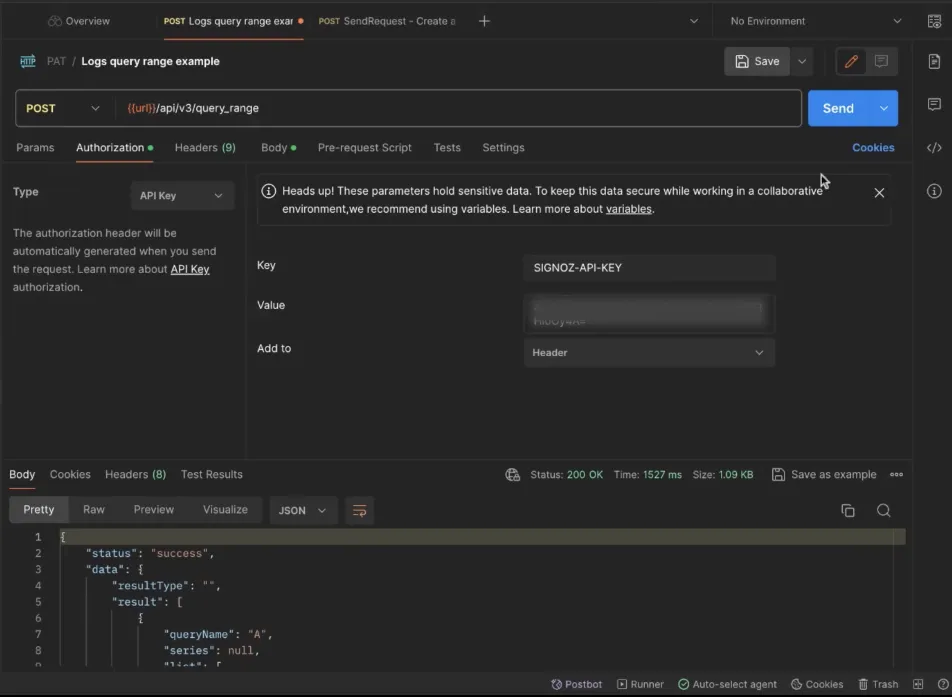

Suppose you want to fetch logs from SigNoz for some use case. The SigNoz docs cover how to use the Logs API and the Trace API. With an access token that has the right permissions, you can fetch the logs you need.

Step 1: Go to the Access Tokens tab under Settings and click on + New Token.

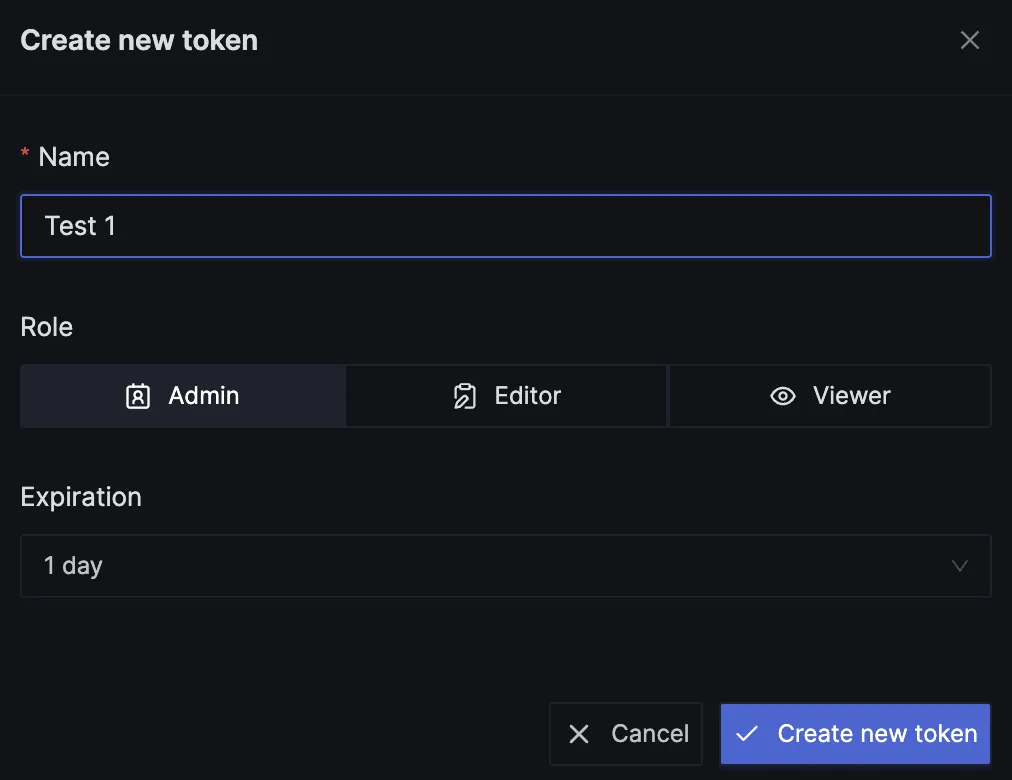

Step 2: Give the token a name. Select one of the three roles (Admin, Editor, or Viewer) based on your requirements. Set an expiration window and click on Create New Key. You can also edit the token later to change its role and name.

Step 3: Send the relevant payload along with the generated access token to fetch the logs you need.
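The request in Step 3 can be sketched roughly as follows. This is a minimal illustration, not the official client: the header name `SIGNOZ-API-KEY` is an assumption that should be checked against the Logs API docs, and the base URL, endpoint path, and payload are hypothetical placeholders that you would replace with the values the docs give for your query. The function only builds the request, so nothing is sent until you choose to.

```python
import json
import urllib.request


def build_logs_request(
    base_url: str,
    endpoint_path: str,
    access_token: str,
    payload: dict,
) -> urllib.request.Request:
    """Build (but do not send) an authenticated POST request for a logs query."""
    url = base_url.rstrip("/") + "/" + endpoint_path.lstrip("/")
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Assumption: the header that carries the access token.
            # Verify the exact name in the Logs API docs.
            "SIGNOZ-API-KEY": access_token,
        },
    )


# Hypothetical values for illustration only.
request = build_logs_request(
    base_url="https://signoz.example.com",
    endpoint_path="/api/logs-query",  # placeholder path, not a real endpoint
    access_token="<your-access-token>",
    payload={"limit": 10},  # placeholder payload, take the real one from the docs
)

# To actually send it:
#   with urllib.request.urlopen(request) as response:
#       logs = json.load(response)
```

Keeping the token out of source code (for example, reading it from an environment variable) is usually a good idea, since anyone holding the token gets the access of the role it was created with.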

You can also create alerts and dashboards programmatically. A token with the Viewer role cannot create alerts; that requires Editor or Admin level access.
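If you script against several tokens, it can help to encode this rule on the client side so a job fails early instead of on a rejected request. The sketch below captures only the single rule stated above (Viewer cannot create alerts, Editor and Admin can). The names `Role` and `can_create_alert` are hypothetical helpers for illustration, and SigNoz itself remains the source of truth for permissions.

```python
from enum import Enum


class Role(Enum):
    VIEWER = "Viewer"
    EDITOR = "Editor"
    ADMIN = "Admin"


# Roles allowed to create alerts, per the rule described in the text.
ALERT_CREATOR_ROLES = frozenset({Role.EDITOR, Role.ADMIN})


def can_create_alert(role: Role) -> bool:
    """Return True if a token with this role may create alerts."""
    return role in ALERT_CREATOR_ROLES


for role in Role:
    print(f"{role.value}: can create alerts = {can_create_alert(role)}")
```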

Improving Onboarding in SigNoz

Having clear documentation and seamless onboarding is critical to providing a great developer experience. Developers don’t want to go on calls, and they judge a tool based on its onboarding. We have learnt a lot from our users, and those learnings form the backbone of SigNoz's onboarding flow.

Approach to Effective Onboarding

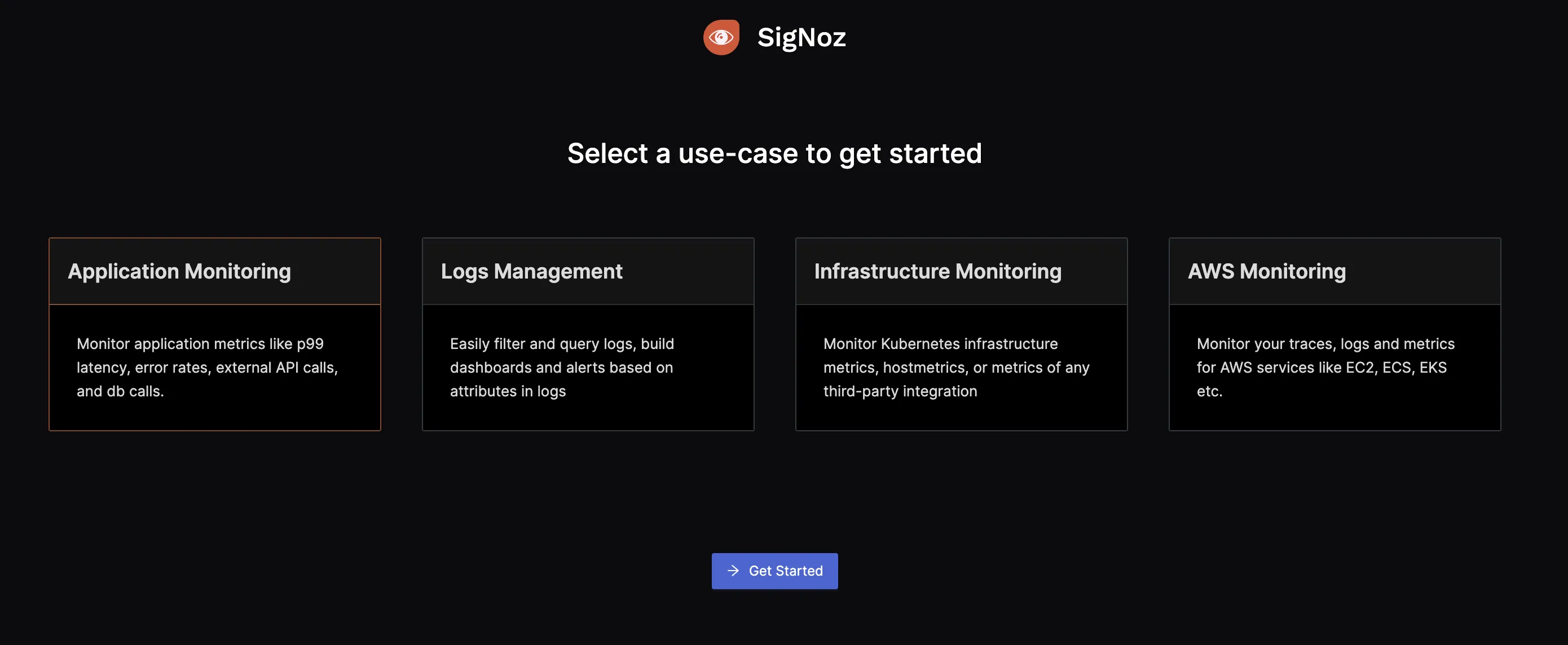

Our onboarding process starts with a clear understanding of the user's intent. By presenting use cases upfront, we guide our users directly to the solutions relevant to their needs at first glance.

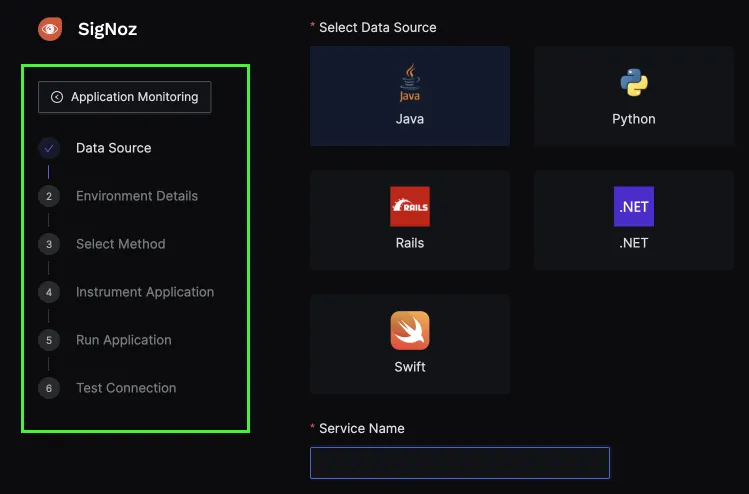

To minimize confusion and enhance usability, we have a step-by-step onboarding flow for each use case. This approach allows users to progress through the integration process without having to worry about what comes next, providing quick wins and a seamless transition from one step to the other.

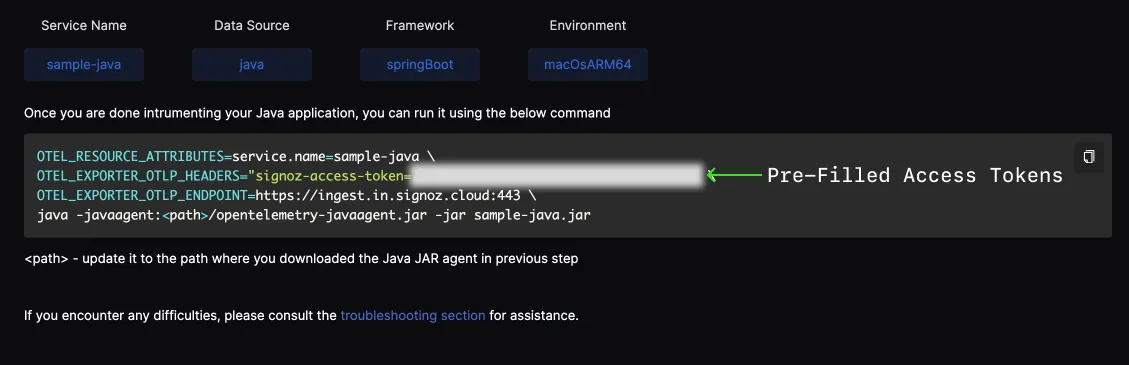

Our onboarding involves config files and environment variables. Our aim is to make the integration process frictionless by pre-filling code snippets with the necessary variables. This reduces the need for context switching and searching for information, allowing developers to focus on the task at hand.
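The idea of pre-filling can be illustrated with a small template. This is only a sketch of the technique, not how the SigNoz UI is implemented. The `OTEL_*` names are standard OpenTelemetry environment variables, while the endpoint, header name, key, and run command below are hypothetical values standing in for what the onboarding flow would fill in for a given user.

```python
from string import Template

# Shell snippet with placeholders that onboarding would fill in per user.
SNIPPET_TEMPLATE = Template("""\
OTEL_EXPORTER_OTLP_ENDPOINT="$endpoint" \\
OTEL_EXPORTER_OTLP_HEADERS="$header_name=$ingestion_key" \\
OTEL_RESOURCE_ATTRIBUTES="service.name=$service_name" \\
$run_command
""")


def render_snippet(
    endpoint: str,
    header_name: str,
    ingestion_key: str,
    service_name: str,
    run_command: str,
) -> str:
    """Return a ready-to-copy snippet; raises KeyError if a value is missing."""
    return SNIPPET_TEMPLATE.substitute(
        endpoint=endpoint,
        header_name=header_name,
        ingestion_key=ingestion_key,
        service_name=service_name,
        run_command=run_command,
    )


# Hypothetical values for illustration only.
print(
    render_snippet(
        endpoint="https://ingest.example.com:443",
        header_name="example-ingestion-header",
        ingestion_key="abc123",
        service_name="checkout",
        run_command="python app.py",
    )
)
```

Using `substitute` rather than `safe_substitute` means a missing value raises an error instead of leaving a raw placeholder in the snippet the user copies.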

In the coming weeks, we are planning to increase the number of use cases that appear in our onboarding flow as well as improve documentation on our website. We also plan to build integrations and pre-built dashboards to help users get started quickly.

A Chat with SigNoz CTO, Ankit Nayan

Pranay sat down with Ankit to discuss a lot of interesting things about SigNoz, OpenTelemetry, and Observability. Below is the rundown of things that they discussed.

Evolution of SigNoz

When we launched, we started out with a complex architecture that included Kafka and Druid. However, we quickly received feedback on the need for a more accessible solution. Our users wanted to try out the product quickly. This led to a pivotal shift towards ClickHouse, significantly simplifying the setup and boosting adoption. The focus was on building a minimum viable product (MVP) swiftly and iterating based on user feedback, laying the foundation for SigNoz's growth and evolution in the observability domain.

The next phase at SigNoz involved a significant emphasis on feature building and understanding market needs that were previously underserved. The team focused on developing solutions that provided more value to users, driven by direct feedback from companies adopting SigNoz.

The adoption of ClickHouse and the growing popularity of OpenTelemetry were particularly notable, as they not only shaped the technical direction of SigNoz but also influenced its community adoption. So it was very interesting to see the growth of SigNoz along with two other open-source projects.

Open-Source and Community Feedback

We've always believed in the power and potential of open-source software. The feedback loop that open source fosters is invaluable. Through it, we've been able to rapidly iterate on our features and address any issues much faster than we could have in a closed-source environment.

In essence, open source is at the heart of what we do at SigNoz.

Why is OpenTelemetry the future?

At SigNoz, we believe OpenTelemetry is the future, and SigNoz has been built to be OpenTelemetry-native from day one. Here are a few reasons why we believe OpenTelemetry is the future.

Vendor-Neutrality: As software developers, we want as much control over our code as possible. OpenTelemetry's vendor-neutral approach ensures that developers are not locked into a specific observability platform.

Future-proofing Your Stack: By adopting a standardized, open-source framework for telemetry data collection, you're essentially future-proofing your application's observability stack. It allows for easier updates, integrations, and scalability as new technologies and platforms emerge.

Broad Adoption and Community Support: OpenTelemetry has garnered broad support from a wide range of companies and has a rapidly growing community. We were surprised to see even early-stage companies with only a few engineers adopting OpenTelemetry for their observability setup.

Enabling Advanced Use Cases: OpenTelemetry is enabling new and advanced use cases. We know of Tracetest, which uses OpenTelemetry traces to enable more robust testing frameworks. We have also collaborated with Traceloop, which is working on providing observability for LLM applications using OpenTelemetry.

New software emitting data in OTel formats: We’re seeing more and more upcoming software emitting data in OpenTelemetry format for their observability. For example, in the future, if we want SigNoz to be monitored by external software, we will emit data in OpenTelemetry format.

Correlation among Signals using OpenTelemetry

OpenTelemetry has enabled the effective correlation of signals—logs, metrics, and traces—through a unified approach to telemetry data. OpenTelemetry uses resource attributes, which are key-value pairs that describe the source of the telemetry data. These attributes are consistent across logs, metrics, and traces, providing a common reference point that can be used to correlate data across these different signals.

OpenTelemetry defines semantic conventions, which are standardized naming conventions for attributes and events. These conventions ensure that data is described in a uniform way, making it easier to correlate related pieces of information across different signals and even across different systems.

That said, it is essential to have a single tool that analyzes all the signals captured by OpenTelemetry. Having a single pane of glass for all observability signals enhances the ability to correlate data across logs, metrics, and traces. This unified view enables more effective and efficient analysis, allowing for quicker identification of issues and understanding of system behavior.
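To make the correlation idea concrete, here is a small sketch that groups logs, spans, and metrics by shared resource attributes. The records are simplified dictionaries invented for illustration, not the actual OpenTelemetry data model or SDK types. The attribute keys `service.name` and `host.name` follow OpenTelemetry semantic conventions, and the choice of these two as the correlation key is an assumption for this example.

```python
from collections import defaultdict

# Resource attribute keys (named per OpenTelemetry semantic conventions)
# used here as the common reference point across signals.
CORRELATION_KEYS = ("service.name", "host.name")


def resource_key(record: dict) -> tuple:
    """Extract the correlation key from a record's resource attributes."""
    resource = record["resource"]
    return tuple(resource.get(key) for key in CORRELATION_KEYS)


def correlate(logs: list, spans: list, metrics: list) -> dict:
    """Group all three signals by their shared resource attributes."""
    grouped = defaultdict(lambda: {"logs": [], "spans": [], "metrics": []})
    for signal, records in (("logs", logs), ("spans", spans), ("metrics", metrics)):
        for record in records:
            grouped[resource_key(record)][signal].append(record)
    return dict(grouped)


# Hypothetical sample data.
checkout = {"service.name": "checkout", "host.name": "node-1"}
cart = {"service.name": "cart", "host.name": "node-2"}

result = correlate(
    logs=[{"resource": checkout, "body": "payment failed"}],
    spans=[{"resource": checkout, "name": "POST /pay"}],
    metrics=[{"resource": cart, "name": "request.count"}],
)

for key, signals in result.items():
    print(key, {name: len(items) for name, items in signals.items()})
```

Because every signal carries the same attribute names, one lookup key is enough to pull up the logs and spans of the `checkout` service together, which is the kind of join a single backend can do for you.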

Future Trends in Observability

As the CTO of SigNoz, Ankit is leading the efforts to build the next generation of observability products. So it was interesting to get his views on future trends in observability.

Machine Learning and AI

Machine learning and AI can be employed to detect anomalies within observability data automatically. We can see AI helping us move beyond static dashboards to dynamic, AI-generated views that adapt based on detected patterns and anomalies.

Observability for Cost Optimization

Another future trend can be the use of observability data not just for performance monitoring but also for optimizing costs. Companies will increasingly leverage observability tools to make data-driven decisions about where to invest in infrastructure or make cuts based on the insights derived from their observability data.

A greater shift towards hosting data on-premises

One of the primary motivations for moving data hosting in-house is the potential for significant cost savings. Some organizations also make the move to gain greater control over their data, addressing privacy, security, and governance concerns more effectively.

For organizations with predictable workload patterns, on-premises hosting can offer more stability and efficiency. The initial allure of the cloud's scalability and convenience is being reconsidered in light of cost, control, and privacy considerations, leading some companies to reevaluate the benefits of on-premises hosting.

What’s next?

So that’s a wrap on our launch week. It was so much fun preparing for it and producing videos and content. The whole team had a fantastic time, so we look forward to doing it again soon. It also provided us with a great opportunity to interact with our community.

We will continue on our mission of democratizing observability for engineering teams of all sizes. Stay tuned for deeper features around OpenTelemetry and taking open-source observability to the next level! 🚀